
You can’t swing a lolcat online today without whacking its head on grids of deeply weird images. From Cucumber Connect 4 to Cthulhu Kandinsky, these are the oddball products of AI – usually Craiyon (formerly DALL·E mini) – which visually interprets text input. If the doomsayers are to be believed, this technology will soon make all human artists redundant.

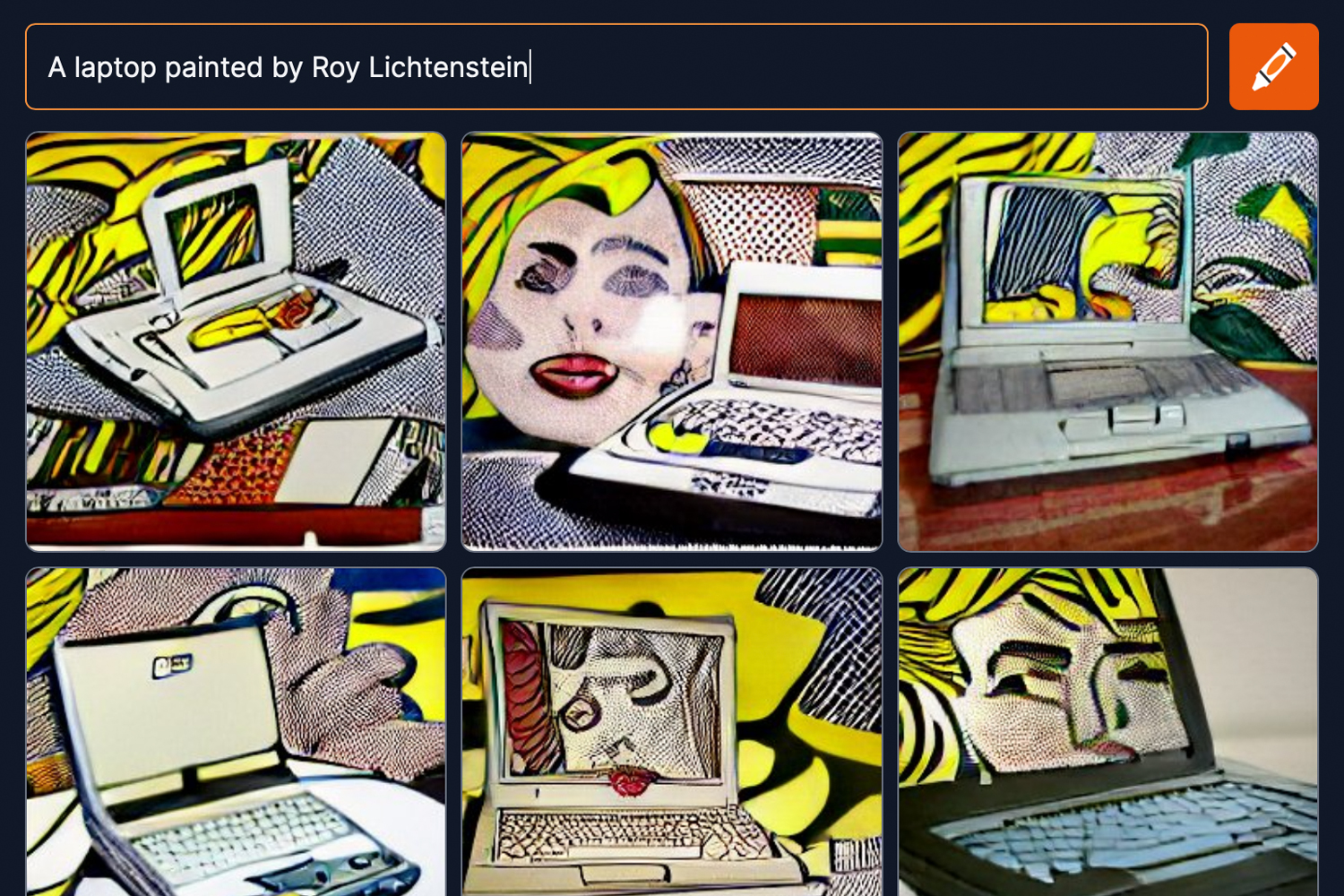

One look at a Craiyon doodle should give you pause on that assertion. The output is best described as ‘terrifying melted collage’. But then Craiyon is a junior effort in this space. By contrast, DALL·E 2 takes things to the next level, creating eye-popping images of any subject, in any style, in a fraction of the time it would take an artist to conceive an image, let alone draw it.

So will it replace humans? Because this more advanced AI is trained on countless existing images and visual styles, you might surmise that it echoes humanity, learning by copying – but at speed, and with relentlessly technically proficient results. You might instead argue, though, that it’s essentially a modern-day Roy Lichtenstein on a massive scale – more clever plagiarist than original artist, remixing existing content in fast forward. It needs material to draw on. Without it, DALL·E 2 has no way to create anything truly new.

That leaves risks on both sides of the divide. If someone can ping an AI to get a bespoke image, that’s quicker and cheaper than commissioning a human. But DALL·E 2 and similar AI models have the potential to land people in a rights pickle, not to mention the risk of an angry mob of illustrators, armed with sharpened boxed copies of Photoshop, rocking up on the doorsteps of the people who use them.

Good enough to print out and hang in the Tate Modern.

Still, you can see the direction of travel. Creatives will increasingly exist in a narrowing space that involves constant learning and demands genuinely imaginative and visionary thinking. Which gave me pause for thought. The internet is full of words too, and there are AIs that bang out endless text, having already gulped down every scrap of publicly available online copy.

I make a living smashing words into shape, but what happens when an AI can do that faster and better? Eek! So in the name of research – and absolutely not because I had a word count to fill – I asked OpenAI to write “a funny op-ed for Stuff.tv”. Here’s what it came up with:

AI is coming for our jobs. There’s no doubt about it. In just a few years, robots will be able to do our jobs better than us. They’ll be able to think faster, work longer hours, and never get tired. But there’s one thing that robots will never be able to do better than us: be boring. Sure, they might be able to do our jobs better than us. But they’ll never be able to do them with the same level of boredom that we can. That’s because boredom is a uniquely human trait. So when the robots come for our jobs, we shouldn’t be afraid. We should be bored.

Um, yeah. There’s the germ of a piece there – a shortcut to an idea – but the AI doesn’t distinguish between being boring and being bored. So I ran the model several more times. The results were mostly painfully generic, but I did find that in many cases it captured the Stuff voice – albeit a version that was either very drunk or extremely forgetful.

And then this happened:

AI is not going to take over the world. It’s not even close to being intelligent enough to do that. In fact, it’s not really intelligent at all. It’s just a bunch of algorithms that can sort of imitate human behaviour.

Yikes! Having now worked out what it is, we can assume this AI just became sentient and is ready to come for us – or at least for all the writers. If this column is written by CrAIg Grannell next week, you’ll know why.
