
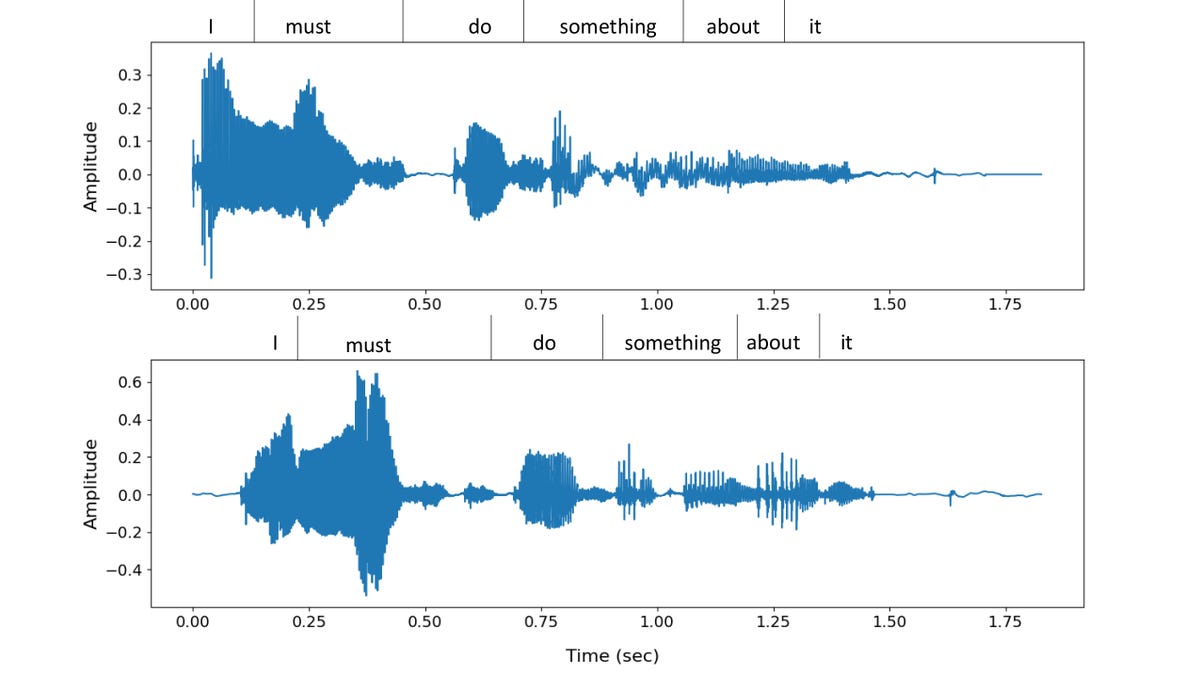

Despite how far advancements in AI video generation have come, it still takes quite a bit of source material, like headshots from various angles or video footage, for someone to create a convincing deepfaked version of your likeness. Faking your voice is a different story: Microsoft researchers recently revealed a new AI tool that can simulate someone’s voice using just a three-second sample of them talking.

The new tool, a “neural codec language model” called VALL-E, is built on Meta’s EnCodec audio compression technology, revealed late last year, which uses AI to compress better-than-CD quality audio to data rates 10 times smaller than even MP3 files, without a noticeable loss in quality. Meta envisioned EnCodec as a way to improve the quality of phone calls in areas with spotty cellular coverage, or as a way to reduce bandwidth demands for music streaming services, but Microsoft is leveraging the technology to make text-to-speech synthesis sound more realistic based on a very limited source sample.

Current text-to-speech systems are able to produce very realistic-sounding voices, which is why smart assistants sound so authentic despite their verbal responses being generated on the fly. But they require high-quality and very clean training data, which is usually captured in a recording studio with professional equipment. Microsoft’s approach makes VALL-E capable of simulating almost anyone’s voice without them spending weeks in a studio. Instead, the tool was trained using Meta’s Libri-light dataset, which contains 60,000 hours of recorded English-language speech from over 7,000 unique speakers, “extracted and processed from LibriVox audiobooks,” which are all public domain.

Microsoft has shared an extensive collection of VALL-E generated samples so you can hear for yourself how capable its voice simulation is, but the results are currently a mixed bag. The tool occasionally has trouble recreating accents, including even subtle ones from source samples where the speaker sounds Irish, and its ability to change up the emotion of a given phrase is sometimes laughable. But more often than not, the VALL-E generated samples sound natural and warm, and are almost impossible to distinguish from the original speakers in the three-second source clips.

In its current form, trained on Libri-light, VALL-E is limited to simulating speech in English, and while its performance is not yet flawless, it will undoubtedly improve as its sample dataset is further expanded. However, it will be up to Microsoft’s researchers to improve VALL-E, as the team isn’t releasing the tool’s source code. In a recently released research paper detailing the development of VALL-E, its creators acknowledge the risks it poses:

“Since VALL-E could synthesize speech that maintains speaker identity, it may carry potential risks in misuse of the model, such as spoofing voice identification or impersonating a specific speaker. To mitigate such risks, it is possible to build a detection model to discriminate whether an audio clip was synthesized by VALL-E. We will also put Microsoft AI Principles into practice when further developing the models.”
