
Nocturnal predators have an ingrained superpower: even in pitch-black darkness, they can easily survey their surroundings, homing in on tasty prey hidden among a monochrome landscape.

Hunting for your next supper isn’t the only perk of seeing in the dark. Take driving down a rural dirt road on a moonless night. Trees and bushes lose their vibrancy and texture. Animals that skitter across the road become shadowy smears. Despite their sophistication during daylight, our eyes struggle to process depth, texture, and even objects in dim lighting.

It’s no surprise that machines have the same problem. Although they’re armed with a myriad of sensors, self-driving cars are still trying to live up to their name. They perform well in perfect weather and on roads with clear traffic lanes. But ask the cars to drive in heavy rain or fog, through smoke from wildfires, or on roads without streetlights, and they struggle.

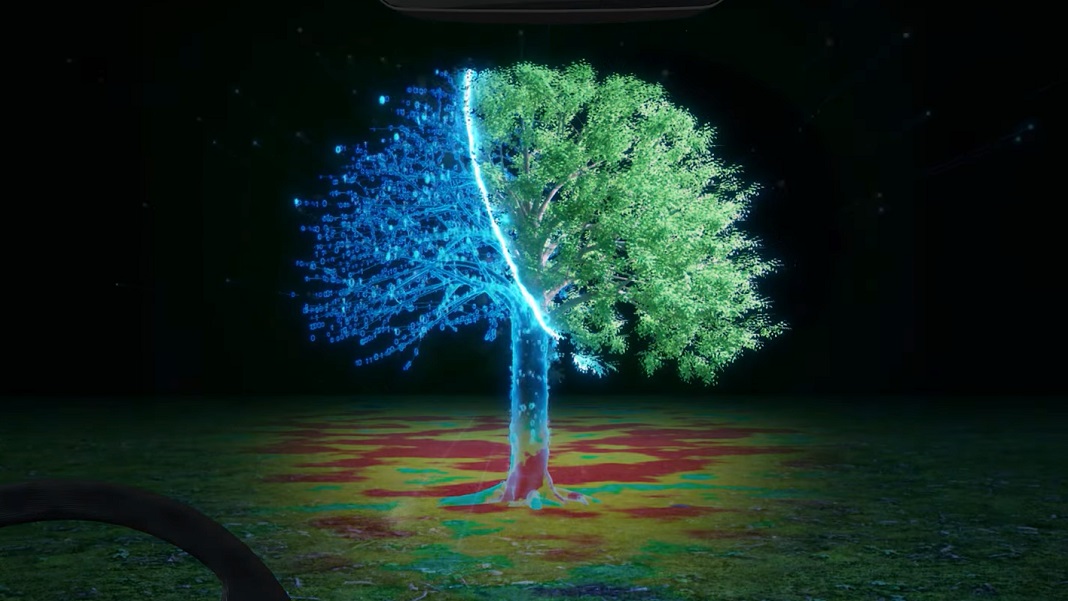

This month, a team from Purdue University tackled the low-visibility problem head-on. Combining thermal imaging, physics, and machine learning, their technology allowed a visual AI system to see in the dark as if it were daylight.

At the core of the system are an infrared camera and AI, trained on a custom database of images to extract detailed information from given surroundings, essentially teaching itself to map the world using heat signals. Unlike previous systems, the technology, called heat-assisted detection and ranging (HADAR), overcame a notorious stumbling block: the “ghosting effect,” which usually causes smeared, ghost-like images hardly useful for navigation.

Giving machines night vision doesn’t just help with autonomous vehicles. A similar approach could also bolster efforts to track wildlife for preservation, or help with long-distance monitoring of body heat at busy ports as a public health measure.

“HADAR is a special technology that helps us see the invisible,” said study author Xueji Wang.

Heat Wave

We’ve taken plenty of inspiration from nature to train self-driving cars. Earlier generations adopted sonar and echolocation as sensors. Then came Lidar scanning, which uses lasers to scan in multiple directions, finding objects and calculating their distance based on how fast the light bounces back.
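To make the ranging idea concrete, here is a minimal sketch of the time-of-flight arithmetic that Lidar-style sensors rely on: a pulse travels to the object and back, so the distance is half the round-trip time multiplied by the speed of light. The function name and the example timing are illustrative assumptions, not details from the study.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact by definition of the metre


def range_from_round_trip(round_trip_seconds: float) -> float:
    """Distance to a target given the round-trip time of a light pulse.

    Hypothetical helper for illustration: the pulse covers the distance
    twice (out and back), hence the division by two.
    """
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0
```

A pulse that returns after 200 nanoseconds corresponds to an object roughly 30 meters away, which hints at how precise the timing has to be.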

Although powerful, these detection methods come with a huge stumbling block: they’re hard to scale up. The technologies are “active,” meaning each AI agent (for example, an autonomous vehicle or a robot) needs to constantly scan and collect information about its surroundings. With multiple machines on the road or in a workspace, the signals can interfere with one another and become distorted. The overall level of emitted signals could also potentially damage human eyes.

Scientists have long looked for a passive alternative. Here’s where infrared signals come in. All materials, whether living bodies, cold cement, or cardboard cutouts of people, emit a heat signature. These are readily captured by infrared cameras, either out in the wild for monitoring wildlife or in science museums. You might have tried it before: step up and the camera shows a two-dimensional blob of you and how different body parts emanate heat on a brightly colored scale.

Unfortunately, the resulting images look nothing like you. The edges of the body are smeared, and there’s little texture or sense of 3D space.

“Thermal pictures of a person’s face show only contours and some temperature contrast; there are no features, making it seem like you have seen a ghost,” said study author Dr. Fanglin Bao. “This loss of information, texture, and features is a roadblock for machine perception using heat radiation.”

This ghosting effect occurs even with the most sophisticated thermal cameras because of physics.

You see, from living bodies to cold cement, all material sends out heat signals. Similarly, the entire environment also pumps out heat radiation. When trying to capture an image based on thermal signals alone, ambient heat noise blends with the signals emitted from the object, resulting in hazy images.
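The blending described above can be sketched with a simple textbook model. This is an illustration under stated assumptions, not the team’s code: the object is treated as opaque, so it reflects whatever fraction of ambient radiation it does not emit, and the surroundings are approximated as a blackbody at a single ambient temperature. The function names are hypothetical.

```python
import math

H = 6.62607015e-34   # Planck constant, J*s
C = 299_792_458.0    # speed of light, m/s
K_B = 1.380649e-23   # Boltzmann constant, J/K


def planck_radiance(wavelength_m: float, temperature_k: float) -> float:
    """Blackbody spectral radiance from Planck's law, in W / (m^2 sr m)."""
    prefactor = 2.0 * H * C**2 / wavelength_m**5
    return prefactor / math.expm1(H * C / (wavelength_m * K_B * temperature_k))


def observed_radiance(wavelength_m, object_temp_k, emissivity, ambient_temp_k):
    """What a thermal camera pixel receives from an opaque surface.

    Assumption: the surface emits a fraction `emissivity` of the blackbody
    signal and reflects the remaining fraction of the ambient signal.
    """
    emitted = emissivity * planck_radiance(wavelength_m, object_temp_k)
    reflected = (1.0 - emissivity) * planck_radiance(wavelength_m, ambient_temp_k)
    return emitted + reflected
```

In this model, an object at the same temperature as its surroundings produces the same signal whatever it is made of, which is one way to see why plain thermal images lose texture and contrast.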

“That’s what we really mean by ghosting: the lack of texture, lack of contrast, and lack of information inside an image,” said Dr. Zubin Jacob, who led the study.

Ghostbusters

HADAR went back to basics, analyzing thermal properties that essentially describe what makes something hot or cold, said Jacob.

Thermal images are made of useful data streams mixed together. They don’t just capture the temperature of an object; they also contain information about its texture and depth.

As a first step, the team developed an algorithm called TeX, which disentangles all of the thermal data into useful bins: texture, temperature, and emissivity (how efficiently an object emits heat radiation). The algorithm was then trained on a custom library that catalogs how different items generate heat signals across the light spectrum.

The algorithms are embedded with our understanding of thermal physics, said Jacob. “We also used some advanced cameras to put all the hardware and software together and extract optimal information from the thermal radiation, even in pitch darkness,” he added.

Our current thermal cameras can’t optimally extract signals from thermal images alone. What was lacking was data for a sort of “color.” Similar to how our eyes are biologically wired to three primary colors (red, green, and blue), the thermal camera can “see” on multiple wavelengths beyond the range of the human eye. These “colors” are critical for the algorithm to decipher information, with missing wavelengths akin to color blindness.
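Why several wavelengths help can be shown with a toy inversion. The sketch below is not the TeX algorithm; it is a simplified stand-in that assumes a “gray” object (one emissivity at all wavelengths), a known ambient signal, and a plain grid search over candidate temperatures. All function names are hypothetical. With a single band, many temperature and emissivity pairs explain the same reading; with several bands, the spectral shape narrows down the answer.

```python
import math

H = 6.62607015e-34   # Planck constant, J*s
C = 299_792_458.0    # speed of light, m/s
K_B = 1.380649e-23   # Boltzmann constant, J/K


def planck_radiance(wavelength_m, temperature_k):
    """Blackbody spectral radiance from Planck's law."""
    prefactor = 2.0 * H * C**2 / wavelength_m**5
    return prefactor / math.expm1(H * C / (wavelength_m * K_B * temperature_k))


def fit_temperature_and_emissivity(bands_m, observed, ambient, candidate_temps_k):
    """Grid-search temperature; solve the best emissivity in closed form.

    Model (an assumption): observed = e * B(T) + (1 - e) * ambient per band.
    """
    excess = [s - x for s, x in zip(observed, ambient)]
    best = None
    for temp in candidate_temps_k:
        # Contrast between a blackbody at this temperature and the ambient signal.
        contrast = [planck_radiance(w, temp) - x for w, x in zip(bands_m, ambient)]
        denom = sum(d * d for d in contrast)
        if denom == 0.0:
            continue  # candidate is indistinguishable from the ambient signal
        emissivity = sum(s * d for s, d in zip(excess, contrast)) / denom
        if not 0.0 <= emissivity <= 1.0:
            continue  # physically implausible emissivity
        residual = sum((s - emissivity * d) ** 2 for s, d in zip(excess, contrast))
        if best is None or residual < best[0]:
            best = (residual, temp, emissivity)
    if best is None:
        raise ValueError("no candidate temperature fits the observation")
    return best[1], best[2]
```

Fed a synthetic three-band reading (say 8, 10, and 12 micrometers) generated from the same model, the search lands on the temperature and emissivity used to create it. Real scenes are harder: emissivity varies with wavelength and material, which is where a materials library becomes necessary.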

Using the model, the team was able to dampen ghosting effects and obtain clearer and more detailed images from thermal cameras.

The demonstration shows HADAR “is poised to revolutionize computer vision and imaging technology in low-visibility conditions,” said Drs. Manish Bhattarai and Sophia Thompson, from Los Alamos National Laboratory and the University of New Mexico, Albuquerque, respectively, who were not involved in the study.

Late-Night Drive With Einstein

In a proof of concept, the team pitted HADAR against another AI-driven computer vision model. The setting, based in Indiana, is straight from the Fast and the Furious: late night, low light, outdoors, with an image of a human being and a cardboard cutout of Einstein standing in front of a black car.

Compared to its rival, HADAR analyzed the scene in one swoop, discerning between glass, rubber, steel, fabric, and skin. The system readily told human from cardboard. It could also perceive depth regardless of external light. “The accuracy to range an object in the daytime is the same…in pitch darkness, if you’re using our HADAR algorithm,” said Jacob.

HADAR isn’t without faults. The main trip-up is the price. According to New Scientist, the entire setup is not just bulky, but costs more than $1 million for its thermal camera and military-grade imager. (HADAR was developed with the help of DARPA, the Defense Advanced Research Projects Agency known for championing adventurous ventures.)

The system also needs to be calibrated on the fly, and can be influenced by a variety of environmental factors not yet built into the model. There’s also the issue of processing speed.

“The current sensor takes around one second to create one image, but for autonomous cars we need around 30 to 60 hertz frame rate, or frames per second,” said Bao.
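The gap Bao describes is easy to quantify. The short sketch below uses only the figures in the quote (about one second per image today, 30 to 60 frames per second needed); the helper names are illustrative.

```python
def frame_budget_ms(frames_per_second: float) -> float:
    """Time available to produce one frame at a given frame rate."""
    return 1000.0 / frames_per_second


def required_speedup(current_seconds_per_frame: float, target_fps: float) -> float:
    """How many times faster the sensor must get to reach the target rate."""
    return current_seconds_per_frame * target_fps


for fps in (30, 60):
    print(f"{fps} fps: {frame_budget_ms(fps):.1f} ms per frame, "
          f"{required_speedup(1.0, fps):.0f}x faster than one image per second")
```

In other words, each image would need to be ready in roughly 17 to 33 milliseconds, a 30- to 60-fold speedup over the current sensor.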

For now, HADAR can’t yet work out of the box with off-the-shelf thermal cameras from Amazon. However, the team is eager to bring the technology to market in the next three years, finally bridging light and dark.

“Evolution has made human beings biased toward the daytime. Machine perception of the future will overcome this long-standing dichotomy between day and night,” said Jacob.

Image Credit: Jacob, Bao, et al/Purdue University
