
Advanced RAG Techniques stand on the cutting edge of artificial intelligence, transforming how machines understand and interact with human language. These sophisticated methods are not just about making smarter chatbots or more intuitive search engines; they’re reshaping our expectations of technology. By seamlessly integrating vast amounts of external knowledge, advanced retrieval-augmented generation (RAG) systems offer a glimpse into an AI-powered future where answers are not just accurate but contextually rich.

The leap from basic models to these advanced techniques marks a significant evolution in the field of AI. It comes after years of digging deep and building from the ground up to push past old hurdles, such as limited context windows and hit-and-miss fact retrieval, that used to trip up how machines grasp what we’re saying.

Understanding Retrieval-Augmented Generation (RAG)

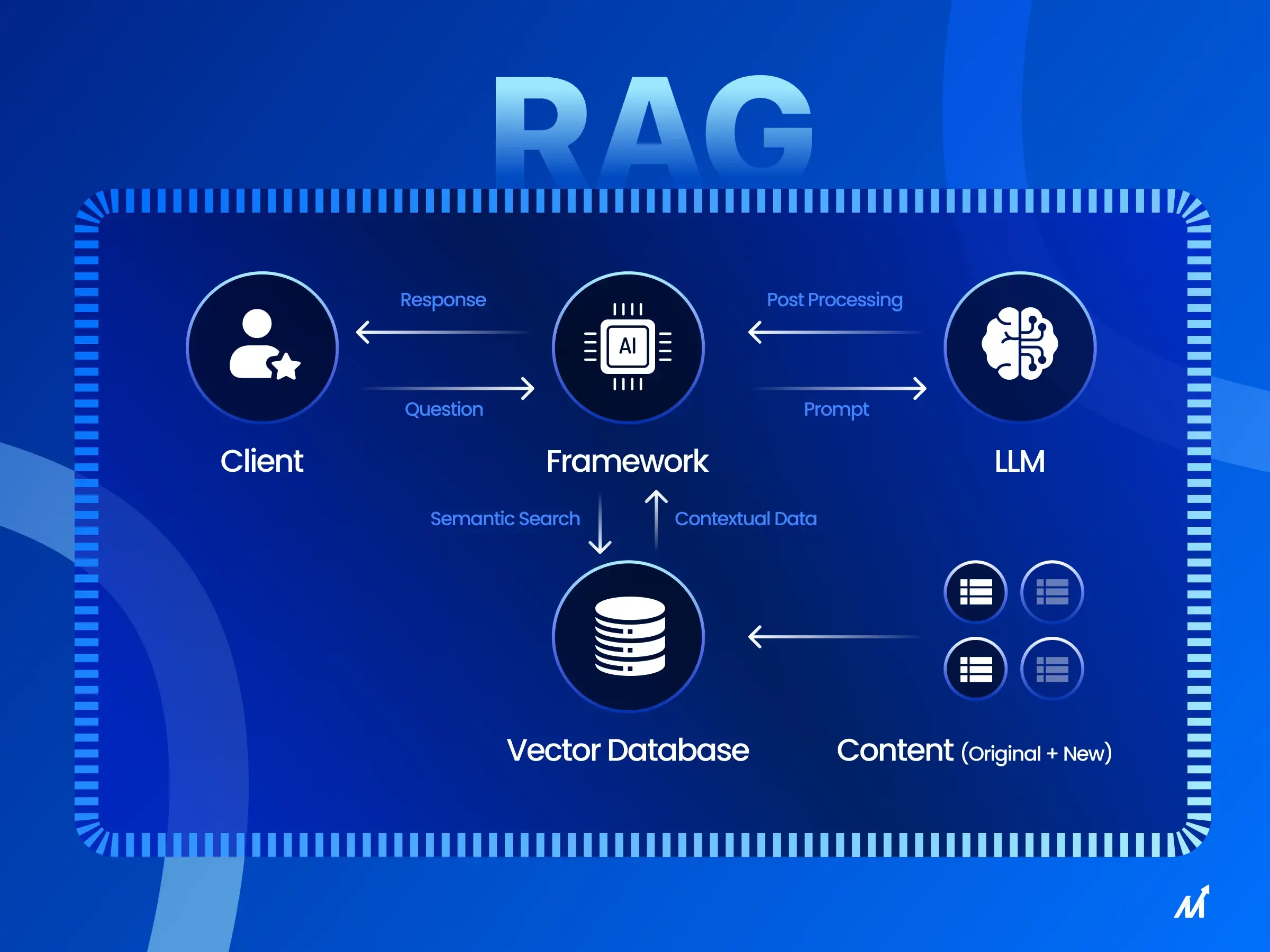

Retrieval-augmented generation (RAG) is a powerful technique that combines the strengths of traditional language models with the vast knowledge stored in external databases. It’s a game-changer in the world of AI and natural language processing.

RAG systems differ from traditional language models in their ability to access and use information from a much larger pool of data. This allows them to generate more accurate, relevant, and contextually rich responses to user queries.

RAG vs. Traditional Language Models

Traditional language models rely solely on the information stored in their parameters during training. While this allows them to generate coherent text, they’re limited by the data they were exposed to during the training process.

RAG, on the other hand, can access external knowledge bases during the generation process. This means RAG models can draw on a vast array of information to inform their outputs, leading to more accurate and informative responses.

The Role of Vectors and Vector Databases

Vector databases are a crucial component of RAG systems. They enable efficient retrieval of relevant documents by representing both the user query and the documents in the knowledge base as high-dimensional vectors (embeddings).

The similarity between these vectors (commonly cosine similarity or a dot product) can be computed quickly, allowing the RAG system to identify the documents most relevant to the user’s query. This process, known as vector search, matches on meaning rather than exact wording, and with approximate nearest-neighbor indexes it scales to very large collections, making it a strong complement to traditional keyword-based search.
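To make the idea concrete, here is a minimal brute-force vector search in plain Python. It assumes the query and documents have already been embedded; the tiny three-dimensional vectors are hypothetical stand-ins for real embedding-model output, and a production vector database would use an approximate nearest-neighbor index rather than scanning every vector.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def vector_search(query_vec: list[float], doc_vecs: dict[str, list[float]], k: int = 2):
    """Brute-force top-k search: score every document, keep the k most similar."""
    scored = [(doc_id, cosine(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical pre-computed embeddings; a real system would call an embedding model.
docs = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.2, 0.8, 0.1],
    "careers-page": [0.0, 0.1, 0.9],
}
print(vector_search([0.85, 0.2, 0.0], docs, k=2))
```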

Retrieval Systems in AI Applications

Retrieval systems are becoming increasingly important in a wide range of AI applications. From question-answering systems to chatbots and content-generation tools, the ability to quickly access and use relevant information is essential.

RAG techniques are particularly well-suited to applications that require access to large amounts of external knowledge. By leveraging vector databases and advanced retrieval techniques, RAG systems can provide more accurate and informative responses to user queries.

As the demand for intelligent, knowledge-driven AI systems continues to grow, retrieval systems and RAG techniques will likely play an increasingly important role in the development of cutting-edge AI applications.

Types of RAG Architectures

Several different approaches to building RAG systems exist, each with its own strengths and weaknesses. In this section, we’ll explore three of the most common RAG architectures: Naive RAG, Advanced RAG, and Modular RAG.

Naive RAG

Naive RAG is the simplest approach to building a RAG system. In this architecture, the model retrieves a fixed number of documents from the knowledge base based on their similarity to the user’s query. These documents are then concatenated with the query and fed into the language model for generation.
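The Naive RAG flow can be sketched as a single prompt-building step. This assumes a retriever has already returned documents sorted by similarity; the prompt wording and the function name `naive_rag_prompt` are hypothetical, and the resulting string would be sent to whatever language model the system uses (not shown).

```python
def naive_rag_prompt(query: str, ranked_docs: list[str], k: int = 3) -> str:
    """Naive RAG: take a fixed top-k of already-ranked documents and
    concatenate them with the user query into one prompt string."""
    context = "\n\n".join(f"[{i + 1}] {doc}" for i, doc in enumerate(ranked_docs[:k]))
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


# Hypothetical retriever output, most similar first.
ranked = [
    "Refunds are issued within 14 days of a return being received.",
    "Returns must include the original receipt.",
    "Our headquarters moved in 2021.",
    "Gift cards cannot be refunded.",
]
prompt = naive_rag_prompt("How long do refunds take?", ranked, k=2)
print(prompt)
```

Note that `k` is the same for every query, whether the question needs one passage or ten.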

While Naive RAG can be effective for simple queries, it has some limitations. The fixed number of retrieved documents can lead to either insufficient or excessive context, and the model may struggle to identify the most relevant information within the retrieved documents.

Advanced RAG Techniques

Advanced RAG techniques aim to address the limitations of Naive RAG by incorporating more sophisticated retrieval and generation mechanisms. These may include query expansion, where additional terms are added to the user’s query to improve retrieval accuracy, or iterative retrieval, where the model retrieves documents in multiple stages to refine the context.
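Query expansion can be illustrated with a hand-written synonym table and a simple keyword-overlap score. Both the `SYNONYMS` table and the scoring function are hypothetical simplifications; real systems often generate expansions with an LLM or from embeddings.

```python
def expand_query(query: str, synonyms: dict[str, list[str]]) -> list[str]:
    """Return the original query terms followed by any synonyms, without duplicates."""
    terms = query.lower().split()
    expanded = list(terms)
    for term in terms:
        for alt in synonyms.get(term, []):
            if alt not in expanded:
                expanded.append(alt)
    return expanded


def keyword_score(terms: list[str], doc: str) -> int:
    """Count how many query terms appear as words in the document."""
    words = set(doc.lower().split())
    return sum(1 for t in terms if t in words)


# Hypothetical synonym table.
SYNONYMS = {"car": ["automobile", "vehicle"], "fix": ["repair"]}

doc = "how to repair an automobile engine"
plain = "fix car".split()
expanded = expand_query("fix car", SYNONYMS)
print(expanded, keyword_score(plain, doc), keyword_score(expanded, doc))
```

The unexpanded query shares no words with the document, while the expanded one matches on "repair" and "automobile".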

Advanced RAG systems may also employ techniques like attention mechanisms to help the model focus on the most relevant parts of the retrieved documents during generation. By selectively attending to different parts of the context, the model can generate more accurate and contextually relevant responses.

Modular RAG Pipelines

Modular RAG pipelines break the retrieval and generation process down into separate, specialized components. This allows for greater flexibility and customization of the RAG system to suit specific application needs.

A typical modular RAG pipeline might include stages for query expansion, retrieval, reranking, and generation, each handled by a dedicated module. This modular approach allows specialized models or techniques to be used at each stage, potentially leading to improved overall performance.

Modular RAG pipelines also make it easier to experiment with different configurations and identify bottlenecks or areas for improvement within the system. By optimizing each module independently, developers can create highly efficient and effective RAG systems tailored to their specific use case.
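One way to picture a modular pipeline is as four independent functions wired together, so any stage can be swapped without touching the others. This is a minimal sketch; the stage signatures and the toy modules (substring retrieval, word-overlap reranking, an echo "generator") are hypothetical placeholders for real models.

```python
from typing import Callable

# Hypothetical stage interfaces.
ExpandFn = Callable[[str], str]
RetrieveFn = Callable[[str], list[str]]
RerankFn = Callable[[str, list[str]], list[str]]
GenerateFn = Callable[[str, list[str]], str]


def make_pipeline(expand: ExpandFn, retrieve: RetrieveFn, rerank: RerankFn,
                  generate: GenerateFn, top_n: int = 2) -> Callable[[str], str]:
    """Wire independent stage functions into one query -> answer callable."""
    def run(query: str) -> str:
        expanded = expand(query)                # stage 1: query expansion
        candidates = retrieve(expanded)         # stage 2: retrieval
        ranked = rerank(expanded, candidates)   # stage 3: reranking
        return generate(query, ranked[:top_n])  # stage 4: generation
    return run


CORPUS = ["cats purr", "dogs bark loudly", "cats and dogs play"]


def no_expansion(query: str) -> str:
    return query


def substring_retrieve(query: str) -> list[str]:
    return [doc for doc in CORPUS if any(word in doc for word in query.split())]


def overlap_rerank(query: str, docs: list[str]) -> list[str]:
    terms = set(query.split())
    return sorted(docs, key=lambda d: len(terms & set(d.split())), reverse=True)


def echo_generate(query: str, docs: list[str]) -> str:
    return f"{query} -> {docs}"  # placeholder for an LLM call


pipeline = make_pipeline(no_expansion, substring_retrieve, overlap_rerank, echo_generate)
print(pipeline("cats dogs"))
```

Swapping `overlap_rerank` for a cross-encoder, or `echo_generate` for a real LLM call, changes one argument and nothing else.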

Key Takeaway:

RAG techniques supercharge AI by pulling information from vast knowledge bases, making responses smarter and more on-point. From simple to modular setups, they tailor answers with precision, transforming how machines understand us.

Optimizing RAG Performance

Optimizing the performance of a RAG system is crucial for delivering accurate and relevant responses to user queries. Several techniques can be employed to improve retrieval accuracy, response quality, and overall system efficiency.

Sentence-Window Retrieval

Sentence-window retrieval focuses on retrieving smaller, more targeted chunks of text, such as individual sentences or short passages, rather than entire documents. The best-matching sentence is then usually expanded with a window of its neighboring sentences, so the generator still receives enough surrounding context. This approach helps reduce noise and improve the relevance of the retrieved context, ultimately leading to more accurate generated responses.

By breaking the document retrieval process down into smaller units, the RAG system can better identify the most pertinent information for answering the user’s query. This method is particularly effective for complex queries that require specific details or facts.
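A minimal sketch of sentence-window retrieval: match the query against individual sentences, then return the best sentence together with its neighbors. Word overlap stands in for embedding similarity, and the regex sentence splitter is a simplifying assumption. LlamaIndex ships a `SentenceWindowNodeParser` for this pattern, but the code below does not use it.

```python
import re


def split_sentences(text: str) -> list[str]:
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def overlap(query: str, sentence: str) -> int:
    """Toy relevance score: number of shared lowercase words."""
    q = set(re.findall(r"\w+", query.lower()))
    return len(q & set(re.findall(r"\w+", sentence.lower())))


def sentence_window_retrieve(query: str, text: str, window: int = 1) -> str:
    """Score single sentences, then return the best one plus `window`
    neighbors on each side as the context passed to generation."""
    sentences = split_sentences(text)
    best = max(range(len(sentences)), key=lambda i: overlap(query, sentences[i]))
    start, end = max(0, best - window), min(len(sentences), best + window + 1)
    return " ".join(sentences[start:end])


doc = ("The warranty covers parts. Labour is billed separately. "
       "Batteries are covered for 12 months. Cosmetic damage is excluded. "
       "Claims require proof of purchase.")
print(sentence_window_retrieve("How long are batteries covered?", doc))
```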

Retriever Ensembling and Reranking

Retriever ensembling involves combining multiple retrieval models to improve overall retrieval accuracy. By leveraging the strengths of different retrieval approaches, such as keyword-based search (for example, BM25) and dense semantic search with BERT-style embedding models, the RAG system can more effectively identify the most relevant documents.
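A common way to merge ranked lists from several retrievers is Reciprocal Rank Fusion (RRF), which needs only ranks, not comparable scores. It is one option among several and not necessarily the fusion method the article has in mind. The two input rankings below are hypothetical outputs of a keyword retriever and an embedding retriever; `k=60` is the constant conventionally used with RRF.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse ranked lists of document ids:
    score(d) = sum over lists of 1 / (k + rank of d), with ranks starting at 1."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)


keyword_hits = ["d1", "d2", "d3"]  # hypothetical BM25 output
dense_hits = ["d3", "d1", "d4"]    # hypothetical embedding-retriever output
print(reciprocal_rank_fusion([keyword_hits, dense_hits]))
```

Documents ranked highly by both retrievers (`d1`, `d3`) rise above those found by only one.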

Reranking techniques are then applied to further refine the retrieved results based on additional criteria, such as relevance scores or diversity. This helps ensure that the most informative and diverse set of documents is selected for the subsequent response generation stage.
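To rerank for diversity as well as relevance, one well-known option is Maximal Marginal Relevance (MMR). Each pick maximizes `lam * relevance - (1 - lam) * redundancy`, where redundancy is the highest similarity to any document already selected. The two-dimensional vectors are hypothetical embeddings, and `lam` is a tuning knob.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def mmr(query_vec: list[float], doc_vecs: dict[str, list[float]],
        k: int = 2, lam: float = 0.5) -> list[str]:
    """Greedily pick documents that balance query relevance against
    similarity to the documents picked so far."""
    selected: list[str] = []
    candidates = list(doc_vecs)
    while candidates and len(selected) < k:
        scores = {}
        for doc_id in candidates:
            relevance = cosine(query_vec, doc_vecs[doc_id])
            redundancy = max((cosine(doc_vecs[doc_id], doc_vecs[s]) for s in selected), default=0.0)
            scores[doc_id] = lam * relevance - (1 - lam) * redundancy
        best = max(scores, key=scores.get)
        selected.append(best)
        candidates.remove(best)
    return selected


# Hypothetical embeddings: "policy-copy" duplicates "policy"; "faq" is less relevant but different.
DOCS = {"policy": [1.0, 0.0], "policy-copy": [1.0, 0.0], "faq": [0.6, 0.8]}
print(mmr([1.0, 0.0], DOCS, k=2, lam=0.3))  # diversity-weighted
print(mmr([1.0, 0.0], DOCS, k=2, lam=1.0))  # pure relevance
```

With `lam=1.0` the duplicate is selected. A lower `lam` trades a little relevance for a second, non-redundant document.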

Response Generation and Synthesis

Response generation in RAG systems involves integrating the retrieved context with the original user query to produce a coherent and informative answer. Advanced techniques, such as attention mechanisms and content planning, are employed to focus on the most relevant parts of the retrieved text and ensure a logical flow of information in the generated response.

By leveraging the power of large language models (LLMs), RAG systems can synthesize the retrieved information into a natural and contextually relevant response. This approach enables RAG systems to handle a diverse range of reasoning tasks and provide accurate answers based on the available information.

Knowledge Refinement

Knowledge refinement techniques aim to improve the quality and relevance of the information retrieved by the RAG system. This can involve techniques such as entity linking, which identifies and disambiguates named entities within the retrieved context, and knowledge graph integration, which incorporates structured knowledge to enhance the retrieval process.
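Entity linking can be sketched as choosing, for each ambiguous mention, the candidate entity whose context words best match the surrounding text. The `CANDIDATES` table and its ids are hypothetical; real systems link against a knowledge base such as Wikidata and score candidates with learned models.

```python
import re

# Hypothetical candidates: surface form -> possible entities, each with hint words.
CANDIDATES = {
    "apple": [
        {"id": "org:apple_inc", "context": {"iphone", "shares", "company", "ceo"}},
        {"id": "food:apple", "context": {"fruit", "orchard", "pie", "eat"}},
    ],
    "jaguar": [
        {"id": "org:jaguar_cars", "context": {"car", "engine", "dealer", "model"}},
        {"id": "animal:jaguar", "context": {"cat", "jungle", "prey", "wild"}},
    ],
}


def link_entities(text: str) -> dict[str, str]:
    """Map each known mention to the candidate whose hint words overlap most
    with the words in the text (the first candidate wins ties)."""
    words = re.findall(r"\w+", text.lower())
    context = set(words)
    links: dict[str, str] = {}
    for word in words:
        if word in CANDIDATES and word not in links:
            best = max(CANDIDATES[word], key=lambda c: len(c["context"] & context))
            links[word] = best["id"]
    return links


print(link_entities("Apple shares rose after the new iPhone launch."))
print(link_entities("The jaguar stalked its prey through the jungle."))
```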

By refining the retrieved information, RAG systems can provide more precise and informative responses to user queries. This is particularly important in domains that require a high level of accuracy, such as healthcare or finance, where the reliability of the generated answers is critical.

Implementing Advanced RAG with LlamaIndex and LangChain

LlamaIndex and LangChain are two popular open-source libraries that offer powerful tools for building advanced RAG systems. These libraries provide a wide range of features and optimizations to streamline the development process and improve the performance of RAG applications.

Indexing Optimization

LlamaIndex offers various indexing techniques to optimize the retrieval process in RAG systems. One such technique is hierarchical indexing, which organizes the knowledge base into a tree-like structure, enabling faster and more efficient retrieval of relevant documents.
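The core idea of hierarchical indexing can be shown with a two-level index: search the short parent summaries first, then rank only the chunks under the winning parent. This is a generic sketch, not LlamaIndex’s implementation; the `INDEX` contents are hypothetical, and word overlap stands in for embedding similarity.

```python
import re


def tokens(text: str) -> set[str]:
    return set(re.findall(r"\w+", text.lower()))


def overlap(query: str, text: str) -> int:
    """Toy relevance score: number of shared words."""
    return len(tokens(query) & tokens(text))


# Hypothetical two-level index: parent summaries with their child chunks.
INDEX = {
    "billing": {"summary": "invoices payments refunds billing",
                "chunks": ["Refunds are processed within 14 days.",
                           "Invoices are emailed monthly."]},
    "shipping": {"summary": "delivery shipping couriers tracking",
                 "chunks": ["Standard delivery takes 3-5 days.",
                            "Tracking links are sent by email."]},
}


def hierarchical_retrieve(query: str, index: dict, top_chunks: int = 1):
    # Level 1: compare the query with parent summaries only.
    parent = max(index, key=lambda name: overlap(query, index[name]["summary"]))
    # Level 2: rank only the chunks inside the chosen branch.
    chunks = sorted(index[parent]["chunks"], key=lambda c: overlap(query, c), reverse=True)
    return parent, chunks[:top_chunks]


print(hierarchical_retrieve("How long do refunds take?", INDEX))
```

Only the summaries plus one branch are scored, which is where the speedup comes from on large trees.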

Another indexing optimization is vector quantization, which compresses the vector representations of documents to reduce storage requirements and improve search speed; with LlamaIndex this is typically provided by the underlying vector store rather than by LlamaIndex itself. By leveraging these indexing optimizations, developers can build scalable RAG systems capable of handling large knowledge bases.
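The simplest form of vector quantization is scalar quantization: each float is mapped to a small integer using a per-vector scale. The symmetric int8 scheme below is a sketch; product quantization, used by libraries such as FAISS, is more elaborate. Packed as bytes, each int8 code uses one byte instead of four for float32, roughly a 4x saving. Python lists are used here only for readability.

```python
def quantize(vec: list[float]) -> tuple[list[int], float]:
    """Symmetric scalar quantization to the int8 range [-127, 127],
    using one scale factor per vector."""
    max_abs = max(abs(x) for x in vec) or 1.0  # avoid dividing by zero for all-zero vectors
    scale = max_abs / 127
    return [round(x / scale) for x in vec], scale


def dequantize(codes: list[int], scale: float) -> list[float]:
    """Approximate reconstruction of the original floats."""
    return [c * scale for c in codes]


vec = [0.12, -0.5, 0.33, 0.0]
codes, scale = quantize(vec)
approx = dequantize(codes, scale)
max_err = max(abs(a - b) for a, b in zip(vec, approx))
print(codes, max_err <= scale / 2 + 1e-12)
```

Rounding keeps every reconstruction error within half a quantization step (`scale / 2`).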

Retrieval Optimization

LangChain provides a flexible and modular framework for building retrieval pipelines in RAG systems. It offers a range of retrieval techniques, such as semantic search and query expansion, that can be easily integrated and experimented with to optimize retrieval performance.

LangChain also supports integration with popular vector stores, such as Pinecone and Elasticsearch, enabling efficient storage and retrieval of vector representations. By leveraging LangChain’s retrieval optimization capabilities, developers can fine-tune their RAG systems to achieve high retrieval accuracy and efficiency.

Post-Retrieval Optimization

Post-retrieval optimization techniques focus on refining the retrieved information before passing it to the response generation stage. LlamaIndex supports this stage through node postprocessors, and one technique that can be applied here is relevance feedback, which allows the RAG system to iteratively refine the retrieval results based on user feedback, improving the relevance of the retrieved context over time.

Another post-retrieval optimization technique is knowledge filtering, which removes irrelevant or redundant information from the retrieved text. By applying these optimizations, RAG systems can provide more concise and targeted responses to user queries.
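Knowledge filtering can be approximated with two rules: drop chunks below a relevance cutoff, then drop near-duplicates of chunks already kept. Both thresholds are hypothetical defaults, and word-level Jaccard similarity is a simple stand-in for embedding similarity. LlamaIndex offers comparable behavior through postprocessors such as `SimilarityPostprocessor`, which this sketch does not use.

```python
import re


def jaccard(a: str, b: str) -> float:
    """Word-set overlap between two texts, in [0, 1]."""
    wa, wb = set(re.findall(r"\w+", a.lower())), set(re.findall(r"\w+", b.lower()))
    return len(wa & wb) / len(wa | wb) if wa | wb else 1.0


def filter_context(scored_chunks: list[tuple[str, float]], min_score: float = 0.5,
                   max_overlap: float = 0.8) -> list[str]:
    """Keep chunks that pass the relevance cutoff and are not near-duplicates
    of a higher-scoring chunk already kept."""
    kept: list[str] = []
    for text, score in sorted(scored_chunks, key=lambda p: p[1], reverse=True):
        if score < min_score:
            continue  # irrelevant
        if any(jaccard(text, k) >= max_overlap for k in kept):
            continue  # redundant
        kept.append(text)
    return kept


chunks = [
    ("Refunds take 14 days.", 0.92),
    ("Refunds take 14 days!", 0.90),
    ("Our office has a cafe.", 0.20),
    ("Refunds need a receipt.", 0.75),
]
print(filter_context(chunks))
```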

CRAG Implementation

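Corrective Retrieval-Augmented Generation (CRAG) is an advanced RAG technique that aims to improve the factual accuracy of generated responses. CRAG uses a lightweight retrieval evaluator to judge how relevant the retrieved documents are to the query and then takes a corrective action: if the documents are judged correct, it refines them by keeping only their relevant parts; if they are judged incorrect, it discards them and falls back to web search; and if the judgment is ambiguous, it combines both sources.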
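The decision logic at the heart of CRAG can be sketched as routing on evaluator scores. In the CRAG paper, a trained evaluator produces a relevance score per document, which then selects one of three actions: correct, incorrect, or ambiguous. Here those scores are simply given as input. The thresholds, the `fake_web_search` stub, and the "refinement" step (dropping low-scoring documents) are hypothetical simplifications.

```python
from typing import Callable


def crag_action(doc_scores: dict[str, float], upper: float = 0.7, lower: float = 0.3) -> str:
    """Map evaluator scores to one of CRAG's three actions."""
    scores = list(doc_scores.values())
    if any(s >= upper for s in scores):
        return "correct"    # at least one document is confidently relevant
    if all(s < lower for s in scores):
        return "incorrect"  # everything looks irrelevant (also when nothing was retrieved)
    return "ambiguous"


def corrective_context(doc_scores: dict[str, float], docs: dict[str, str],
                       web_search: Callable[[], list[str]],
                       upper: float = 0.7, lower: float = 0.3) -> tuple[str, list[str]]:
    """Build the generation context for the chosen action.
    Simplification: 'refinement' just drops documents scoring below `lower`."""
    action = crag_action(doc_scores, upper, lower)
    kept = [docs[d] for d, s in doc_scores.items() if s >= lower]
    if action == "correct":
        return action, kept
    if action == "incorrect":
        return action, web_search()  # discard retrieval, fall back to search
    return action, kept + web_search()  # ambiguous: combine both sources


DOCS = {"d1": "Canberra is the capital of Australia.", "d2": "Sydney hosts the Opera House."}


def fake_web_search() -> list[str]:
    return ["(web search result placeholder)"]  # stub for a real search API


print(corrective_context({"d1": 0.9, "d2": 0.2}, DOCS, fake_web_search))
```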

LlamaIndex provides an implementation of CRAG, and the original CRAG paper reports improvements over standard RAG on several question-answering and long-form generation benchmarks. By leveraging CRAG, developers can build RAG systems that generate more accurate and reliable responses, even for queries where the initial retrieval returns poor results.

Building Graphs with LangGraph

LangGraph is a library from the LangChain ecosystem for building stateful, multi-step LLM workflows as graphs. Nodes are processing steps, such as retrieving, grading, or generating, and edges (including conditional edges and cycles) decide which step runs next. Modeling a RAG pipeline this way enables more sophisticated retrieval and reasoning patterns, such as multi-hop retrieval and self-correcting loops like CRAG.

This approach is particularly useful for tasks that require complex reasoning over multiple pieces of information. With LangGraph, developers can build RAG systems that gather and connect information across several retrieval steps, opening up new possibilities for advanced question answering and knowledge discovery.
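The graph idea behind LangGraph (nodes that update a shared state, plus conditional edges that pick the next node) can be sketched in plain Python. This is not the LangGraph API, only a small state machine illustrating the pattern. The knowledge base `KB` and the `REWRITES` table are hypothetical stand-ins for a vector store and an LLM that rephrases queries.

```python
from typing import Callable

State = dict  # shared state passed between nodes


def run_graph(nodes: dict[str, Callable[[State], State]],
              edges: dict[str, Callable[[State], str]],
              start: str, state: State, max_steps: int = 20) -> State:
    """Run a node, then ask that node's edge function which node runs next.
    'END' stops execution; max_steps guards against infinite loops."""
    current = start
    for _ in range(max_steps):
        state = nodes[current](state)
        current = edges[current](state)
        if current == "END":
            return state
    raise RuntimeError("graph did not reach END")


KB = {"capital of australia": "Canberra is the capital of Australia."}
REWRITES = {"which city is australia's capital?": "capital of australia"}


def retrieve(state: State) -> State:
    query = state["query"].lower()
    return {**state, "docs": [text for key, text in KB.items() if key in query]}


def rewrite(state: State) -> State:
    new_query = REWRITES.get(state["query"].lower(), state["query"])
    return {**state, "query": new_query, "rewrites": state["rewrites"] + 1}


def generate(state: State) -> State:
    answer = state["docs"][0] if state["docs"] else "I don't know."
    return {**state, "answer": answer}


NODES = {"retrieve": retrieve, "rewrite": rewrite, "generate": generate}
EDGES = {
    # Conditional edge: retry once with a rewritten query if nothing was found.
    "retrieve": lambda s: "generate" if s["docs"] or s["rewrites"] >= 1 else "rewrite",
    "rewrite": lambda s: "retrieve",
    "generate": lambda s: "END",
}

final = run_graph(NODES, EDGES, "retrieve", {"query": "Which city is Australia's capital?", "rewrites": 0})
print(final["answer"])
```

The cycle `retrieve -> rewrite -> retrieve` is the kind of loop a linear chain cannot express directly.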

Key Takeaway:

Boost your RAG system’s performance by focusing on targeted retrieval, combining models for accuracy, and refining responses with advanced techniques. Use tools like LlamaIndex and LangChain to optimize every step, ensuring more precise answers.

Addressing Limitations of Naive RAG Pipelines

One of the main limitations of Naive RAG pipelines is the potential for retrieval errors, where the model fails to identify the most relevant information for a given query. To address this, researchers have explored techniques such as query expansion, where the original query is augmented with additional terms to improve retrieval accuracy, and semantic search, which goes beyond keyword matching to capture the underlying meaning of the query.

These techniques often involve a deep dive into the knowledge base, leveraging graph databases and advanced indexing techniques to enable more sophisticated retrieval strategies. By improving the quality of the retrieved documents, these approaches can significantly enhance the overall performance of the RAG system.

Improving Retrieval Accuracy

Fine-tuning the retrieval component is crucial for improving the accuracy of the retrieved documents. This can involve techniques such as learning better document representations, optimizing the similarity metrics used for retrieval, and incorporating relevance feedback from users or downstream tasks.

One promising approach is to use learning-to-rank techniques, which train the retrieval model to optimize ranking metrics such as mean reciprocal rank (MRR). By directly optimizing for retrieval quality, these techniques can significantly improve the relevance of the retrieved documents.
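Mean reciprocal rank averages, over queries, the value 1/rank of the first relevant result (0 when no relevant result is retrieved). It is the kind of target a learning-to-rank setup evaluates against. The sample rankings and relevance labels below are hypothetical, and the function assumes one label set per query, in the same order.

```python
def mean_reciprocal_rank(results: list[list[str]], relevant: list[set[str]]) -> float:
    """MRR = mean over queries of 1 / (rank of the first relevant doc), or 0 if none."""
    total = 0.0
    for ranked, gold in zip(results, relevant):
        for rank, doc_id in enumerate(ranked, start=1):
            if doc_id in gold:
                total += 1.0 / rank
                break
    return total / len(results) if results else 0.0


results = [["d3", "d1", "d2"], ["d5", "d4"], ["d7", "d8"]]
relevant = [{"d1"}, {"d5"}, {"d9"}]
print(mean_reciprocal_rank(results, relevant))  # (1/2 + 1 + 0) / 3
```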

Enhancing Response Quality

Another challenge in Naive RAG is ensuring the quality and coherence of the generated responses, particularly when integrating information from multiple retrieved documents. Advanced techniques such as content planning and information ordering can help improve the structure and flow of the generated text.

Additionally, techniques like information filtering and redundancy removal can help reduce the amount of irrelevant or repetitive information in the final output. By focusing on the most salient and informative content, these techniques can enhance the overall quality and usefulness of the generated responses.

Leveraging External Knowledge Bases

While RAG systems typically rely on a predefined knowledge base, a wealth of additional information is often available from external sources such as web searches or structured knowledge bases. Incorporating this external knowledge can help improve the coverage and accuracy of RAG systems.

Techniques such as entity linking and knowledge graph integration can enable RAG models to leverage these external sources effectively. By connecting the retrieved documents to broader knowledge bases, these approaches can provide additional context and information to enhance the generated responses.

Incorporating User Feedback

Incorporating user feedback is another promising approach for improving the performance of RAG systems over time. By allowing users to provide feedback on the generated responses, such as indicating whether an answer is correct or relevant, the system can learn to refine its retrieval and generation strategies.
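One simple way to fold feedback into retrieval is to nudge each document’s score by its accumulated thumbs-up and thumbs-down votes. The class name, the `weight` parameter, and the smoothing formula are all hypothetical choices for illustration. Production systems more often use feedback as training data for the retriever or reranker.

```python
from collections import defaultdict


class FeedbackReranker:
    """Blend retrieval scores with per-document user votes (hypothetical scheme)."""

    def __init__(self, weight: float = 0.2):
        self.weight = weight
        self.votes = defaultdict(lambda: [0, 0])  # doc_id -> [helpful, unhelpful]

    def record(self, doc_id: str, helpful: bool) -> None:
        self.votes[doc_id][0 if helpful else 1] += 1

    def rerank(self, scored: list[tuple[str, float]]) -> list[str]:
        def adjusted(pair: tuple[str, float]) -> float:
            doc_id, score = pair
            ups, downs = self.votes.get(doc_id, (0, 0))
            # Smoothed net approval in (-1, 1); documents without votes get no adjustment.
            return score + self.weight * (ups - downs) / (ups + downs + 1)
        return [doc_id for doc_id, _ in sorted(scored, key=adjusted, reverse=True)]


reranker = FeedbackReranker(weight=0.2)
candidates = [("a", 0.80), ("b", 0.75)]
print(reranker.rerank(candidates))
for _ in range(3):
    reranker.record("b", helpful=True)
print(reranker.rerank(candidates))
```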

This can be particularly valuable in domains where the knowledge base may be incomplete or evolving over time. By continuously learning from user interactions, RAG systems can adapt and improve their performance based on real-world usage patterns and feedback.

To evaluate the effectiveness of these techniques, researchers often use faithfulness metrics, which assess whether the generated responses are accurate and supported by the retrieved evidence. By measuring the alignment between the generated text and the retrieved documents, these metrics provide a quantitative way to track improvements in RAG performance.
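As a rough illustration of what a faithfulness metric measures, here is a crude lexical proxy: the fraction of answer sentences whose content words mostly appear in the retrieved context. This is an assumption-laden simplification. Tools such as RAGAS compute faithfulness by having an LLM check each claim against the context, which word overlap cannot do. The stopword list and the 0.6 threshold are arbitrary.

```python
import re

STOPWORDS = {"the", "a", "an", "is", "are", "of", "in", "to", "and", "it", "for", "on", "within"}


def content_words(text: str) -> set[str]:
    return {w for w in re.findall(r"\w+", text.lower()) if w not in STOPWORDS}


def lexical_faithfulness(answer: str, context: str, threshold: float = 0.6) -> float:
    """Share of answer sentences whose content words are at least `threshold`
    covered by the context (a crude, lexical stand-in for claim verification)."""
    ctx = content_words(context)
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", answer.strip()) if s]
    supported = 0
    for sentence in sentences:
        words = content_words(sentence)
        if words and len(words & ctx) / len(words) >= threshold:
            supported += 1
    return supported / len(sentences) if sentences else 0.0


context = "Refunds are processed within 14 days. A receipt is required."
answer = "Refunds are processed within 14 days. Refunds also include free shipping."
print(lexical_faithfulness(answer, context))  # second sentence is unsupported
```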

How can Markovate help with RAG Optimization?

Markovate offers a comprehensive suite of tools and services specifically designed to enhance the performance of RAG (Retrieval-Augmented Generation) systems. Our expertise lies in providing advanced solutions that leverage cutting-edge algorithms, data structures, and integration capabilities to optimize RAG workflows. Here’s how Markovate can help with RAG optimization:

Indexing and Retrieval Capabilities:

We develop algorithms and data structures that form the backbone of indexing and retrieval capabilities. These capabilities enable RAG systems to retrieve relevant information from extensive knowledge bases quickly and accurately. Whether through keyword-based search, semantic similarity analysis, or hybrid approaches, our team trains RAG systems to access the necessary data efficiently. We continuously update and refine our indexing techniques to ensure optimal performance in various scenarios.

Customizable RAG Pipelines:

Markovate provides a flexible framework for building RAG pipelines tailored to specific requirements and preferences. The modular architecture and extensive API allow seamless integration of various retrieval strategies, generation models, and post-processing techniques into RAG workflows. This level of customization ensures that RAG systems can adapt to diverse use cases and domain-specific needs. Markovate offers comprehensive documentation and support to help companies build and fine-tune RAG pipelines according to their unique specifications.

Seamless Integration with LLMs (Large Language Models):

We excel at seamlessly integrating state-of-the-art language models into RAG systems, such as GPT-3 for generation and BERT for retrieval and ranking. By combining the power of pre-trained language models with retrieval capabilities, we can create RAG systems capable of producing high-quality, contextually relevant responses. Markovate simplifies the integration process with straightforward setup procedures for incorporating LLMs into RAG pipelines.

Overall, Markovate serves as a strategic partner for organizations seeking to optimize their RAG systems. Through advanced indexing and retrieval capabilities, customizable pipelines, and seamless integration with LLMs, Markovate builds RAG solutions that excel at delivering accurate, contextually appropriate responses across diverse domains and applications.

Key Takeaway:

To boost a RAG system’s performance, dig deep into the knowledge base with query expansion and semantic search. Fine-tune retrieval accuracy through learning-to-rank techniques, and improve response quality by organizing content clearly. Don’t forget to pull in external knowledge sources for richer responses, and use user feedback for continuous improvement.

Conclusion

As we’ve journeyed through the realm of Advanced RAG Techniques today, it’s clear that this isn’t your average tech upgrade. These techniques aren’t just another small step forward; they’re changing the game in how artificial intelligence digs into and uses data to make our lives easier. From logistics companies optimizing their supply chains to virtual assistants offering richer interactions, the impact is profound.

As research has continued to shed light on AI’s potential since the early 1960s, it’s evident now more than ever that smart algorithms powered by advanced RAG could very well be among humanity’s most useful companions.

I’m Rajeev Sharma, Co-Founder and CEO of Markovate, an innovative digital product development firm with a focus on AI and Machine Learning. With over a decade in the field, I’ve led key initiatives for major players like AT&T and IBM, specializing in mobile app development, UX design, and end-to-end product creation. Armed with a Bachelor’s Degree in Computer Science and Scrum Alliance certifications, I continue to drive technological excellence in today’s fast-paced digital landscape.
