
Are you an Adobe Creative Cloud user? It’s possible that images stored in your account there are being used to train Adobe’s AI. This was pointed out by the account for Krita, an application used for creating digital art, which posted a concerned tweet recently. Apparently, users are opted in to Adobe’s AI image analysis by default. This is part of their Privacy and Personal Data terms and conditions. I certainly don’t remember doing this, so I did some digging around to see what the fine print was.

How Often Do You Read Ts & Cs?

I think it was in high school when a close friend asked me if I ever closely read the terms and conditions when installing software on my PC. Given how slow the machines were back then and how eager we were to install software (read: computer games) on our systems, I doubt any of us actually read the fine print. We didn’t even have to scroll down to the end of the T&Cs. We just clicked Accept, and it proceeded to the installation. “Well, if no one does, what’s stopping the software companies from having us sign over our savings and property to them as part of the T&Cs?” he joked. We had a good laugh about it back then.

But now, when I look back at it, I realize there’s a lot we take for granted these days. Trust in software companies is one such concept. Imagine a scenario where you have an agreement with your landlord. Under this agreement, he comes by once a month to take pictures of the house’s exterior for whatever reason. This continues for some years. Then, one fine day, you discover close-up photos of yourself taken while you were inside the house. You then find out your landlord took these. You never remember agreeing to this, so you confront him about it. He shrugs off any concern and claims this was part of your most recent lease renewal contract. You probably scribbled your signature hastily to renew the tenancy contract, but that sort of explanation would infuriate anyone, wouldn’t it?

Where’s The Clarity From Their Side?

We don’t expect software companies, much less well-established and world-leading ones, to do sneaky things behind our backs. We certainly don’t expect them to put up their hands later and say, “Hey, you agreed to this.” Most of us skip through the terms and conditions when installing software. I mean, who has the time to go through each line in there to see what we agree to? There’s a sense of trust we place in these companies: a belief that they won’t take undue advantage of us, our assets, and our privacy when we do so. It’s imperative that we’re informed clearly about such changes, rather than having them assumed as part of an existing or updated agreement. But has this really been the case?

What’s All The Hullabaloo About?

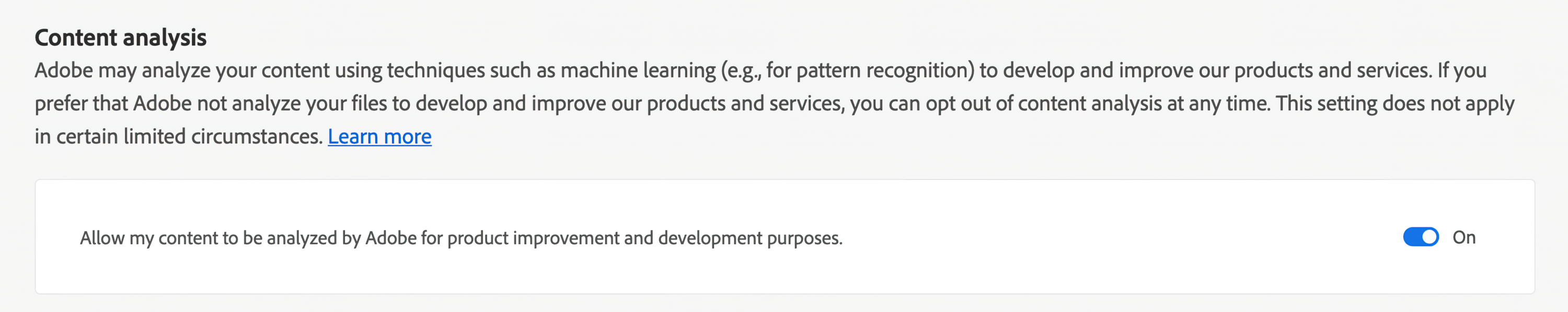

Adobe looks to have been using images and files from its Creative Cloud users, possibly without clearly letting its users know it’s doing so. It seems that by agreeing to their Content Analysis clause under the Privacy and Personal Data settings of your Creative Cloud account, you’ve given them permission to do so. The page doesn’t clearly say whether this analysis is being used to train their AI models, but that becomes more evident when you click on their Learn More link.

Machine Learning Content Analysis

Machine learning-enabled features can help you become more efficient and creative. For example, we may use machine learning-enabled features to help you organize and edit your images more quickly and accurately. With object recognition in Lightroom, we can auto-tag photos of your dog or cat. In Photoshop, machine learning can be used to automatically correct the perspective of an image for you.

It looks like Adobe is saying that only your Cloud account data is analyzed. In one way, that’s reassuring. Files on my desktop and smartphones with the Adobe apps installed are left alone.

How Is The Data Analyzed?

Data and files in your Adobe Cloud account are aggregated with similar content from other users. You can directly opt out of this kind of data analysis, except under certain circumstances. These include if you’re actively involved in the Adobe Photoshop Improvement Program, an active contributor to Adobe Stock, or testing out their beta release software. Also at the end of the FAQ page is a line that says, “…if you use features that rely on content analysis techniques (for example, Content-Aware Fill in Photoshop), your content may still be analyzed when you use those features to help improve that feature.”

This is confusing to me. If I opt out of the Content Analysis program, do I not get to use AI features in my apps? Or does this mean I can use them, but files and images edited with AI-assisted features will still be used by Adobe? What am I opting out of exactly?

Is My Identity Private If I Opt In?

You might be worried that your cloud data is manually reviewed by employees there. Adobe has stated this is only the case in some exceptions.

They also clearly state that the “insights obtained through content analysis will not be used to re-create your content or lead to identifying any personal information.”

“Adobe primarily uses machine learning in Creative Cloud and Document Cloud to analyze your content. Machine learning describes a subset of artificial intelligence in which a computing system uses algorithms to analyze and learn from data without human intervention to draw inferences from patterns and make predictions. The system may continue to learn and improve over time as it receives more data.”

We Ought To Clearly Read Ts & Cs

Midjourney’s founder David Holz has already admitted to using tens of millions of images without seeking the owners’ permission to train its AI. While somewhat related, you could argue that this is more of a blatant copyright breach issue. But it does raise the question of what companies and organizations are doing to ethically train their AI models while respecting the content and copyright of individuals. Are we really naive enough to believe that software companies don’t use the data they have on you for their own benefit? Having said that, it’s not wrong to say we probably expected more from Adobe regarding this.

To Adobe’s credit, their Photoshop Improvement page clearly states that “customers aren’t signed up to participate when they install Photoshop 21.2 (June 2020 release).” So did the tens of millions of its Creative Cloud users knowingly sign up their images to be used by Adobe’s AI after this? There are a lot of ‘mays’ and ‘mights’ at this point. We’re still missing clarity on what data can be used to train Adobe’s AI. Some might think it isn’t fair for Adobe to use all these images for free.

Maybe we’re too lazy when upgrading our software and not reading what we’re opting in to. I guess, as everyday users, we might not immediately get some clarity from Adobe on this. I’m curious to see if this complies with GDPR regulations. Adobe’s next move to state their intentions for using our data in their AI training should be interesting to read. How will they (if they do) revise their terms and conditions?
