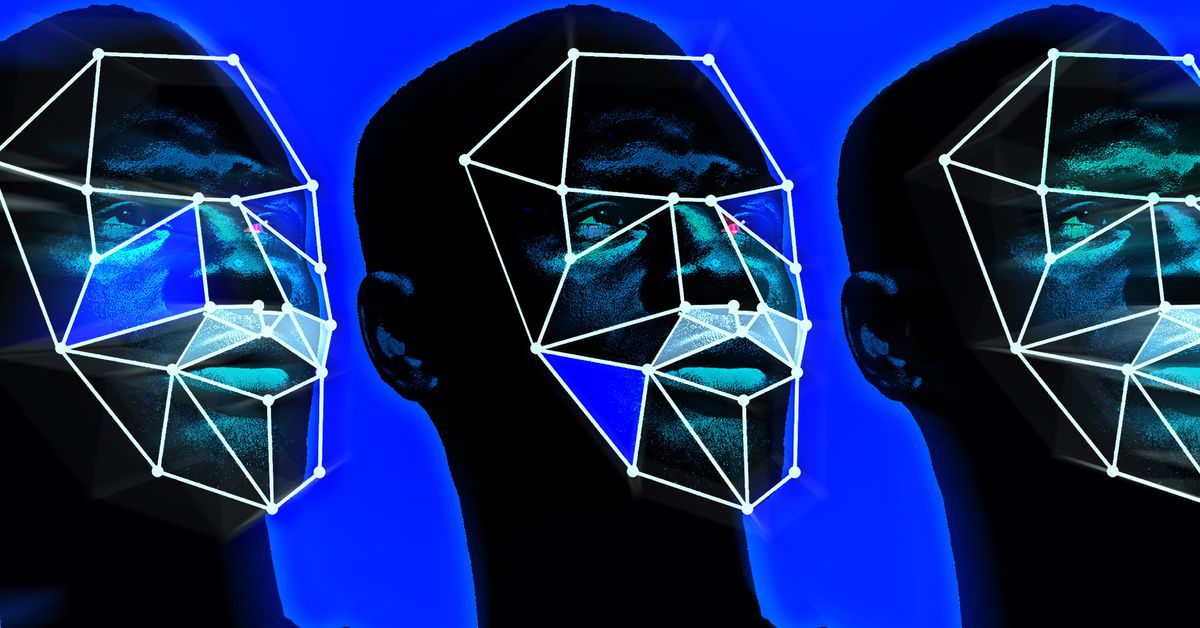

Controversial facial recognition firm Clearview AI has been ordered by Australia’s national privacy regulator to destroy all images and facial templates belonging to people living in the country.

Clearview, which claims to have scraped 10 billion images of people from social media sites in order to identify them in other photos, sells its technology to law enforcement agencies. It was trialled by the Australian Federal Police (AFP) between October 2019 and March 2020.

Now, following an investigation, Australia’s privacy regulator, the Office of the Australian Information Commissioner (OAIC), has found that the company breached citizens’ privacy. “The covert collection of this kind of sensitive information is unreasonably intrusive and unfair,” said OAIC privacy commissioner Angelene Falk in a press statement. “It carries significant risk of harm to individuals, including vulnerable groups such as children and victims of crime, whose images can be searched on Clearview AI’s database.”

Said Falk: “When Australians use social media or professional networking sites, they don’t expect their facial images to be collected without their consent by a commercial entity to create biometric templates for completely unrelated identification purposes. The indiscriminate scraping of people’s facial images, only a fraction of whom would ever be connected with law enforcement investigations, may adversely impact the personal freedoms of all Australians who perceive themselves to be under surveillance.”

The OAIC’s investigation into Clearview’s practices was carried out in conjunction with the UK’s Information Commissioner’s Office (ICO). However, the ICO has yet to make a decision about the legality of Clearview’s work in the UK. The agency says it is “considering its next steps and any formal regulatory action that may be appropriate under the UK data protection laws.”

As reported by The Guardian, Clearview itself intends to appeal the decision. “Clearview AI operates legitimately according to the laws of its places of business,” Mark Love, a lawyer for the firm BAL Lawyers representing Clearview, told the publication. “Not only has the commissioner’s decision missed the mark on the manner of Clearview AI’s manner of operation, the commissioner lacks jurisdiction.”

Clearview argues that the images it collected were publicly available, so no breach of privacy occurred, and that they were published in the US, so Australian law does not apply.

Around the world, though, there is growing discontent with the spread of facial recognition systems, which threaten to eliminate anonymity in public spaces. Yesterday, Facebook parent company Meta announced it was shutting down the social platform’s facial recognition feature and deleting the facial templates it created for the system. The company cited “growing concerns about the use of this technology as a whole.” Meta also recently paid a $650 million settlement after the tech was found to have breached privacy laws in Illinois in the US.