
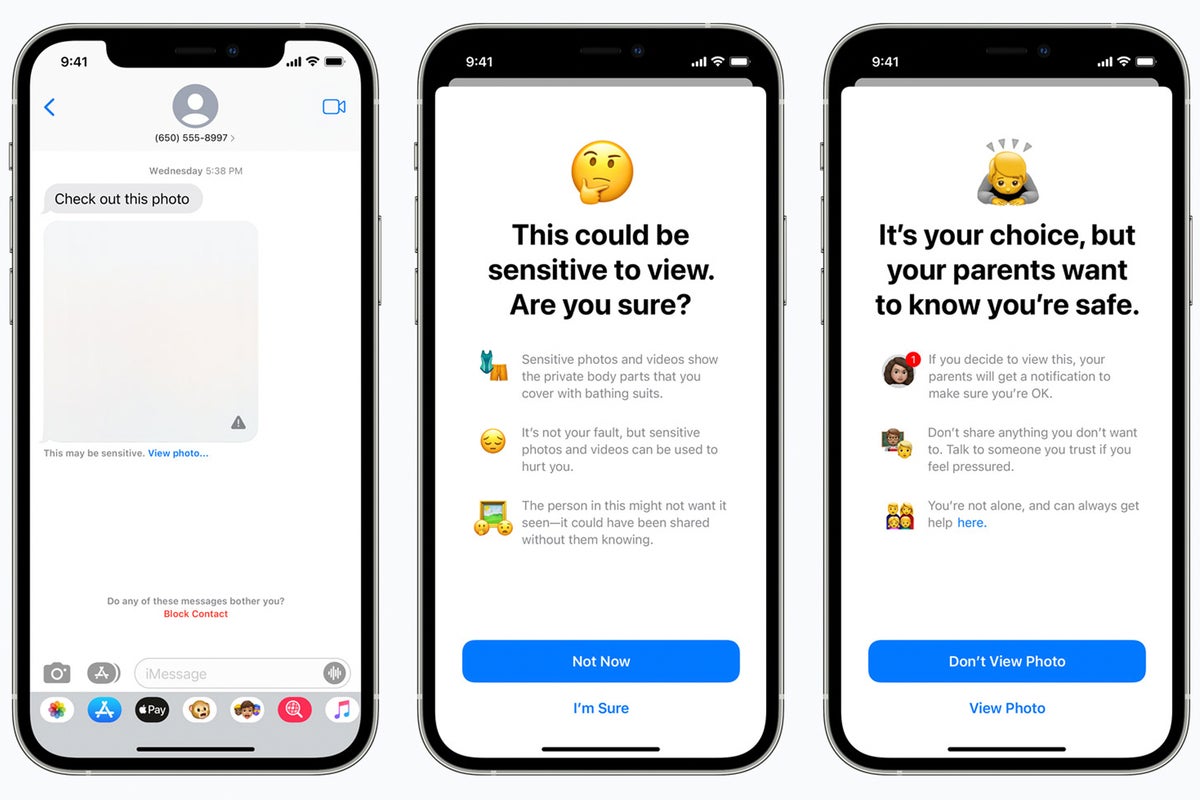

Apple seems to have stepped back on its least popular innovation since the Butterfly Keyboard, quietly removing mentions of its controversial CSAM scanning/surveillance technology from its website following widespread criticism of the idea.

Child safety tools

In August, the company announced plans to introduce "surveillance as a service" on iPhones.

At the time, it revealed new communication safety features, now available in iOS 15.2, and another tool, including the ability to scan a user's devices against a set of data to identify child sexual abuse material (CSAM). If such material was found, the system flagged that user for investigation.

The response was immediate. Privacy advocates around the world quickly realized that if your iPhone could scan your device for one thing, it could easily be asked to scan for another. They warned that such technology would become a Pandora's box, open to abuse by authoritarian governments. Researchers also warned that the technology might not work particularly well and could be abused or manipulated to implicate innocent people.

Apple tried a charm offensive, but it failed. While some industry watchers tried to normalize the scheme on the grounds that everything that happens on the internet can already be tracked, most people remained thoroughly unconvinced.

A consensus emerged that by introducing such a system, Apple was, deliberately or accidentally, ushering in a new era of on-device, universal, warrantless surveillance that sat poorly beside its privacy promise. Susan Landau, professor of cybersecurity and policy at Tufts University, said: "It's extraordinarily dangerous. It's dangerous for business, national security, for public safety and for privacy."

While all critics agreed that CSAM is an evil, the fear of such tools being abused against the wider population proved hard to shift.

In September, Apple postponed the plan, saying: "Based on feedback from customers, advocacy groups, researchers and others, we have decided to take additional time over the coming months to collect input and make improvements before releasing these critically important child safety features."

MacRumors reports that all mentions of CSAM scanning have now been removed from Apple's Child Safety page, which now discusses the communication safety tools in Messages and the search protections. These tools use on-device machine learning to identify sexually explicit images and block such material. They also give children advice if they search for such information. There has been one change to this tool: it no longer alerts parents if their child chooses to view such items, partly because critics had pointed out that this could put some children at risk.

It's good that Apple has sought to protect children against such material, but it is also good that it appears to have abandoned this component, at least for now.

I don't believe the company has completely abandoned the idea. It would not have come this far had it not been fully committed to finding ways to protect children against such material. I imagine what it now seeks is a system that provides effective protection but cannot be abused to harm the innocent or extended by authoritarian regimes.

The danger is that, having invented such a technology in the first place, Apple will still likely face some governmental pressure to use it.

In the short term, it already scans images stored in iCloud for such material, much in line with what the rest of the industry already does.

Of course, considering the recent NSO Group attacks, high-profile security scares, the weaponization and balkanization of content-driven "tribes" on social media and the tsunami of ransomware attacks plaguing digital business, it's easy to think that technology innovation has reached a zenith of unexpected and deeply negative consequences. Perhaps we should now explore the extent to which tech undermines the basic freedoms the geeks in the Homebrew Computer Club originally sought to foster and protect?

Please follow me on Twitter, or join me in the AppleHolic's bar & grill and Apple Discussions groups on MeWe.

Copyright © 2021 IDG Communications, Inc.
