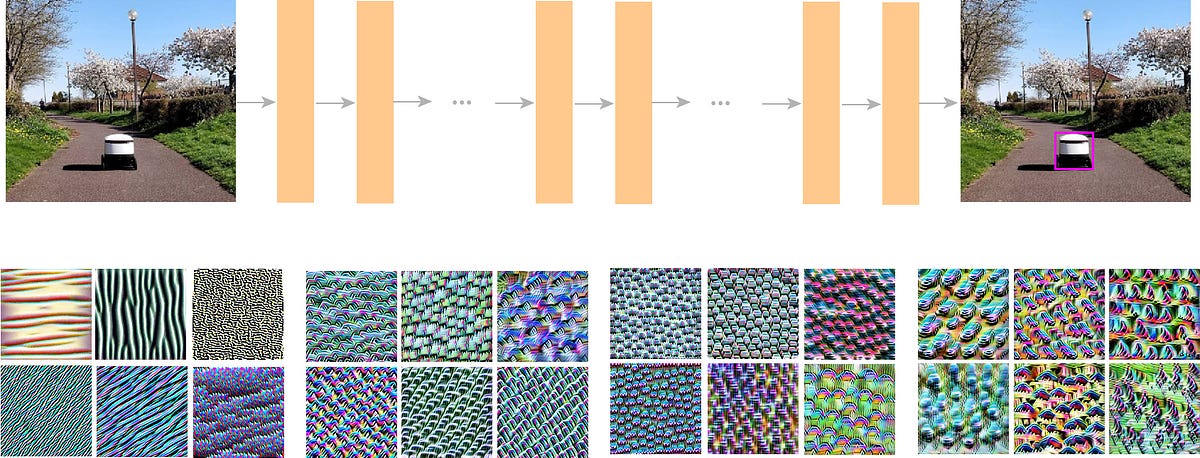

Starship is building a fleet of robots to deliver packages locally on demand. To achieve this successfully, the robots must be safe, polite and quick. But how do you get there with limited computational resources and without expensive sensors such as LIDARs? This is the engineering reality you have to tackle unless you live in a universe where customers happily pay $100 for a delivery.

To begin with, the robots sense the world with radars, a multitude of cameras and ultrasonics.

However, the challenge is that most of this data is low-level and non-semantic. For example, a robot may sense that an object is ten meters away, but without knowing the object category, it's difficult to make safe driving decisions.

Machine learning through neural networks is surprisingly useful in converting this unstructured low-level data into higher-level information.

Starship robots mostly drive on sidewalks and cross streets when they need to. This poses a different set of challenges compared to self-driving cars. Traffic on car roads is more structured and predictable. Cars move along the lanes and don't change direction too often, whereas humans frequently stop abruptly, meander, may be accompanied by a dog on a leash, and don't signal their intentions with turn signal lights.

To understand the surrounding environment in real time, a central component of the robot is an object detection module: a program that takes images as input and returns a list of object boxes.

That's all very well, but how do you write such a program?

An image is a large three-dimensional array consisting of a myriad of numbers representing pixel intensities. These values change significantly when the image is taken at night instead of daytime; when the object's color, scale or position changes; or when the object itself is truncated or occluded.

Left: what the human sees.
Right: what the computer sees.

For some complex problems, teaching is more natural than programming.

In the robot software, we have a set of trainable units, mostly neural networks, where the code is written by the model itself. The program is represented by a set of weights.

At first, these numbers are randomly initialized, and the program's output is random as well. The engineers present the model with examples of what they would like it to predict and ask the network to get better the next time it sees a similar input. By iteratively changing the weights, the optimization algorithm searches for programs that predict bounding boxes more and more accurately.

Evolution of programs found by the optimization procedure.

However, one needs to think deeply about the examples that are used to train the model.

Should the model be penalized or rewarded when it detects a car in a window reflection? What should it do when it detects a picture of a human on a poster? Should a car trailer full of cars be annotated as one entity, or should each of the cars be annotated separately?

These are all examples that have occurred while building the object detection module in our robots.

Neural network detects objects in reflections and on posters. A bug or a feature?

When teaching a machine, big data alone is not enough. The data collected must be rich and varied. For example, uniformly sampling images and then annotating them would yield many pedestrians and cars, yet the model would lack enough examples of bikes or skaters to reliably detect these categories.

The team needs to specifically mine for hard examples and rare cases, otherwise the model would not progress. Starship operates in several different countries, and the varying weather conditions enrich the set of examples.
Many people were surprised when Starship delivery robots operated during the snowstorm 'Emma' in the UK, while airports and schools remained closed.

The robots deliver packages in various weather conditions.

At the same time, annotating data takes time and resources. Ideally, it's best to train and enhance models with less data. This is where architecture engineering comes into play. We encode prior knowledge into the architecture and optimization processes to reduce the search space to programs that are more likely in the real world.

We incorporate prior knowledge into neural network architectures to get better models.

In some computer vision applications such as pixel-wise segmentation, it's useful for the model to know whether the robot is on a sidewalk or a road crossing. To provide a hint, we encode global image-level clues into the neural network architecture; the model then determines whether to use them or not, without having to learn them from scratch.

After data and architecture engineering, the model might work well. However, deep learning models require a significant amount of computing power, and this is a big challenge for the team because we cannot take advantage of the most powerful graphics cards on battery-powered, low-cost delivery robots.

Starship wants our deliveries to be low cost, meaning our hardware must be inexpensive.
That's the very same reason why Starship doesn't use LIDARs (a detection system which works on the principle of radar, but uses light from a laser), which would make understanding the world much easier; we don't want our customers paying more than they need to for delivery.

State-of-the-art object detection systems published in academic papers run at around 5 frames per second [MaskRCNN], and real-time object detection papers don't report rates significantly over 100 FPS [Light-Head R-CNN, tiny-YOLO, tiny-DSOD]. What's more, these numbers are reported on a single image; however, we need a 360-degree understanding (the equivalent of processing roughly 5 single images).

To provide some perspective, Starship models run at over 2000 FPS when measured on a consumer-grade GPU, and process a full 360-degree panorama image in one forward pass. This is equivalent to 10,000 FPS when processing 5 single images with batch size 1.

Neural networks are better than humans at many visual problems, although they may still contain bugs. For example, a bounding box may be too large, the confidence too low, or an object might be hallucinated in a place that is actually empty.

Potential problems in the object detection module. How to solve these?

Fixing these bugs is challenging.

Neural networks are considered to be black boxes that are hard to analyze and comprehend. However, to improve the model, engineers need to understand the failure cases and dive deep into the specifics of what the model has learned.

The model is represented by a set of weights, and one can visualize what each specific neuron is trying to detect. For example, the first layers of Starship's network activate on standard patterns like horizontal and vertical edges.
The next block of layers detects more complex textures, while higher layers detect car parts and full objects.

How the neural network we use in robots builds up its understanding of images.

Technical debt takes on another meaning with machine learning models. The engineers continuously improve the architectures, optimization processes and datasets. The model becomes more accurate as a result. Yet, changing the detection model to a better one doesn't necessarily guarantee success in a robot's overall behaviour.

There are dozens of components that use the output of the object detection model, each of which requires a different precision and recall level, set based on the existing model. However, the new model may act differently in various ways. For example, the output probability distribution could be biased towards larger values or be wider. Even though the average performance is better, it may be worse for a specific group like large cars. To avoid these hurdles, the team calibrates the probabilities and checks for regressions on multiple stratified data sets.

Average performance doesn't tell you the whole story of the model.

Monitoring trainable software components poses a different set of challenges compared to monitoring standard software. Little concern is given to inference time or memory usage, because these are mostly constant.

However, dataset shift becomes the primary concern: the data distribution used to train the model is different from the one where the model is currently deployed.

For example, all of a sudden there may be electric scooters driving on the sidewalks. If the model didn't take this class into account, it will have a hard time classifying it correctly.
The information derived from the object detection module will disagree with other sensory information, resulting in requests for assistance from human operators and thus slowing down deliveries.

A major concern in practical machine learning: training and test data come from different distributions.

Neural networks empower Starship robots to be safe on road crossings by avoiding obstacles like cars, and on sidewalks by understanding all the different directions that humans and other obstacles can choose to go.

Starship robots achieve this using inexpensive hardware, which poses many engineering challenges but makes robot deliveries a strong reality today. Starship's robots are doing real deliveries seven days a week in multiple cities around the world, and it's rewarding to see how our technology is continuously bringing people increased convenience in their lives.
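
For readers who want to try the idea of checking for regressions on stratified data sets, here is a minimal Python sketch. It is an illustration only, not Starship's actual tooling: the group names, the toy detection outcomes and the helper functions `recall_by_group`, `overall_recall` and `find_regressions` are all hypothetical, and recall stands in for whatever metric a real evaluation would use.

```python
from collections import defaultdict

def recall_by_group(samples):
    """Recall per group, given (group, detected) pairs for ground-truth objects."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for group, detected in samples:
        totals[group] += 1
        hits[group] += int(detected)
    return {group: hits[group] / totals[group] for group in totals}

def overall_recall(samples):
    """Recall over all ground-truth objects, ignoring groups."""
    return sum(int(detected) for _, detected in samples) / len(samples)

def find_regressions(old_samples, new_samples, tolerance=0.0):
    """Groups where the new model's recall dropped by more than `tolerance`."""
    old = recall_by_group(old_samples)
    new = recall_by_group(new_samples)
    return {
        group: (old[group], new[group])
        for group in old
        if group in new and new[group] < old[group] - tolerance
    }

# Hypothetical toy outcomes: was each ground-truth object detected?
old_model = [
    ("pedestrian", True), ("pedestrian", False),
    ("pedestrian", False), ("pedestrian", True),
    ("large_car", True), ("large_car", True),
]
new_model = [
    ("pedestrian", True), ("pedestrian", True),
    ("pedestrian", True), ("pedestrian", True),
    ("large_car", True), ("large_car", False),
]

if __name__ == "__main__":
    print("overall recall, old model:", overall_recall(old_model))
    print("overall recall, new model:", overall_recall(new_model))
    print("regressed groups:", find_regressions(old_model, new_model))
```

In this toy data the new model has a higher overall recall, yet the check still flags `large_car`, which is the situation described earlier where average performance hides a regression in one specific group.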
