[ad_1]

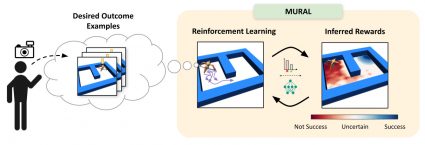

Diagram of MURAL, our methodology for studying uncertainty-aware rewards for RL. After the person supplies a number of examples of desired outcomes, MURAL robotically infers a reward perform that takes into consideration these examples and the agent’s uncertainty for every state.

Though reinforcement studying has proven success in domains corresponding to robotics, chip placement and enjoying video video games, it’s often intractable in its most common type. Particularly, deciding when and go to new states within the hopes of studying extra concerning the setting will be difficult, particularly when the reward sign is uninformative. These questions of reward specification and exploration are intently related — the extra directed and “effectively formed” a reward perform is, the simpler the issue of exploration turns into. The reply to the query of discover most successfully is prone to be intently knowledgeable by the actual alternative of how we specify rewards.

For unstructured downside settings corresponding to robotic manipulation and navigation — areas the place RL holds substantial promise for enabling higher real-world clever brokers — reward specification is commonly the important thing issue stopping us from tackling tougher duties. The problem of efficient reward specification is two-fold: we require reward features that may be laid out in the true world with out considerably instrumenting the setting, but in addition successfully information the agent to unravel tough exploration issues. In our latest work, we deal with this problem by designing a reward specification method that naturally incentivizes exploration and permits brokers to discover environments in a directed manner.

Consequence Pushed RL and Classifier Based mostly Rewards

Whereas RL in its most common type will be fairly tough to sort out, we will think about a extra managed set of subproblems that are extra tractable whereas nonetheless encompassing a big set of fascinating issues. Particularly, we think about a subclass of issues which has been known as end result pushed RL. In end result pushed RL issues, the agent shouldn’t be merely tasked with exploring the setting till it possibilities upon reward, however as an alternative is supplied with examples of profitable outcomes within the setting. These profitable outcomes can then be used to deduce an appropriate reward perform that may be optimized to unravel the specified issues in new situations.

Extra concretely, in end result pushed RL issues, a human supervisor first supplies a set of profitable end result examples , representing states during which the specified activity has been achieved. Given these end result examples, an appropriate reward perform will be inferred that encourages an agent to realize the specified end result examples. In some ways, this downside is analogous to that of inverse reinforcement studying, however solely requires examples of profitable states quite than full knowledgeable demonstrations.

When eager about really infer the specified reward perform from profitable end result examples , the best method that involves thoughts is to easily deal with the reward inference downside as a classification downside – “Is the present state a profitable end result or not?” Prior work has applied this instinct, inferring rewards by coaching a easy binary classifier to differentiate whether or not a selected state is a profitable end result or not, utilizing the set of offered aim states as positives, and all on-policy samples as negatives. The algorithm then assigns rewards to a selected state utilizing the success chances from the classifier. This has been proven to have an in depth connection to the framework of inverse reinforcement studying.

Classifier-based strategies present a way more intuitive strategy to specify desired outcomes, eradicating the necessity for hand-designed reward features or demonstrations:

These classifier-based strategies have achieved promising outcomes on robotics duties corresponding to cloth placement, mug pushing, bead and screw manipulation, and extra. Nonetheless, these successes are typically restricted to easy shorter-horizon duties, the place comparatively little exploration is required to search out the aim.

What’s Lacking?

Customary success classifiers in RL undergo from the important thing subject of overconfidence, which prevents them from offering helpful shaping for arduous exploration duties. To grasp why, let’s think about a toy 2D maze setting the place the agent should navigate in a zigzag path from the highest left to the underside proper nook. Throughout coaching, classifier-based strategies would label all on-policy states as negatives and user-provided end result examples as positives. A typical neural community classifier would simply assign success chances of 0 to all visited states, leading to uninformative rewards within the intermediate phases when the aim has not been reached.

Since such rewards wouldn’t be helpful for guiding the agent in any explicit route, prior works are likely to regularize their classifiers utilizing strategies like weight decay or mixup, which permit for extra easily growing rewards as we strategy the profitable end result states. Nonetheless, whereas this works on many shorter-horizon duties, such strategies can really produce very deceptive rewards. For instance, on the 2D maze, a regularized classifier would assign comparatively excessive rewards to states on the alternative facet of the wall from the true aim, since they’re near the aim in x-y area. This causes the agent to get caught in an area optima, by no means bothering to discover past the ultimate wall!

The truth is, that is precisely what occurs in observe:

Uncertainty-Conscious Rewards by CNML

As mentioned above, the important thing subject with unregularized success classifiers for RL is overconfidence — by instantly assigning rewards of 0 to all visited states, we shut off many paths which may ultimately result in the aim. Ideally, we wish our classifier to have an applicable notion of uncertainty when outputting success chances, in order that we will keep away from excessively low rewards with out affected by the deceptive native optima that outcome from regularization.

Conditional Normalized Most Probability (CNML)

One methodology notably well-suited for this activity is Conditional Normalized Most Probability (CNML). The idea of normalized most probability (NML) has sometimes been used within the Bayesian inference literature for mannequin choice, to implement the minimal description size precept. In newer work, NML has been tailored to the conditional setting to provide fashions which might be a lot better calibrated and keep a notion of uncertainty, whereas attaining optimum worst case classification remorse. Given the challenges of overconfidence described above, this is a perfect alternative for the issue of reward inference.

Slightly than merely coaching fashions by way of most probability, CNML performs a extra advanced inference process to provide likelihoods for any level that’s being queried for its label. Intuitively, CNML constructs a set of various most probability issues by labeling a selected question level with each attainable label worth that it’d take, then outputs a last prediction based mostly on how simply it was in a position to adapt to every of these proposed labels given the complete dataset noticed up to now. Given a selected question level , and a previous dataset , CNML solves ok totally different most probability issues and normalizes them to provide the specified label probability , the place represents the variety of attainable values that the label might take. Formally, given a mannequin , loss perform , coaching dataset with lessons , and a brand new question level , CNML solves the next most probability issues:

It then generates predictions for every of the lessons utilizing their corresponding fashions, and normalizes the outcomes for its last output:

Comparability of outputs from a typical classifier and a CNML classifier. CNML outputs extra conservative predictions on factors which might be removed from the coaching distribution, indicating uncertainty about these factors’ true outputs. (Credit score: Aurick Zhou, BAIR Weblog)

Intuitively, if the question level is farther from the unique coaching distribution represented by D, CNML will be capable to extra simply adapt to any arbitrary label in , making the ensuing predictions nearer to uniform. On this manner, CNML is ready to produce higher calibrated predictions, and keep a transparent notion of uncertainty based mostly on which knowledge level is being queried.

Leveraging CNML-based classifiers for Reward Inference

Given the above background on CNML as a way to provide higher calibrated classifiers, it turns into clear that this supplies us a simple strategy to deal with the overconfidence downside with classifier based mostly rewards in end result pushed RL. By changing a typical most probability classifier with one skilled utilizing CNML, we’re in a position to seize a notion of uncertainty and acquire directed exploration for end result pushed RL. The truth is, within the discrete case, CNML corresponds to imposing a uniform prior on the output area — in an RL setting, that is equal to utilizing a count-based exploration bonus because the reward perform. This seems to present us a really applicable notion of uncertainty within the rewards, and solves lots of the exploration challenges current in classifier based mostly RL.

Nonetheless, we don’t often function within the discrete case. Most often, we use expressive perform approximators and the ensuing representations of various states on this planet share similarities. When a CNML based mostly classifier is realized on this situation, with expressive perform approximation, we see that it might probably present extra than simply activity agnostic exploration. The truth is, it might probably present a directed notion of reward shaping, which guides an agent in the direction of the aim quite than merely encouraging it to broaden the visited area naively. As visualized under, CNML encourages exploration by giving optimistic success chances in less-visited areas, whereas additionally offering higher shaping in the direction of the aim.

As we’ll present in our experimental outcomes, this instinct scales to increased dimensional issues and extra advanced state and motion areas, enabling CNML based mostly rewards to unravel considerably tougher duties than is feasible with typical classifier based mostly rewards.

Nonetheless, on nearer inspection of the CNML process, a serious problem turns into obvious. Every time a question is made to the CNML classifier, totally different most probability issues must be solved to convergence, then normalized to provide the specified probability. As the scale of the dataset will increase, because it naturally does in reinforcement studying, this turns into a prohibitively sluggish course of. The truth is, as seen in Desk 1, RL with commonplace CNML based mostly rewards takes round 4 hours to coach a single epoch (1000 timesteps). Following this process blindly would take over a month to coach a single RL agent, necessitating a extra time environment friendly answer. That is the place we discover meta-learning to be an important instrument.

Meta-learning is a instrument that has seen a number of use instances in few-shot studying for picture classification, studying faster optimizers and even studying extra environment friendly RL algorithms. In essence, the thought behind meta-learning is to leverage a set of “meta-training” duties to be taught a mannequin (and infrequently an adaptation process) that may in a short time adapt to a brand new activity drawn from the identical distribution of issues.

Meta-learning methods are notably effectively suited to our class of computational issues because it entails shortly fixing a number of totally different most probability issues to judge the CNML probability. Every the utmost probability issues share important similarities with one another, enabling a meta-learning algorithm to in a short time adapt to provide options for every particular person downside. In doing so, meta-learning supplies us an efficient instrument for producing estimates of normalized most probability considerably extra shortly than attainable earlier than.

The instinct behind apply meta-learning to the CNML (meta-NML) will be understood by the graphic above. For a data-set of factors, meta-NML would first assemble duties, equivalent to the constructive and destructive most probability issues for every datapoint within the dataset. Given these constructed duties as a (meta) coaching set, a meta–studying algorithm will be utilized to be taught a mannequin that may in a short time be tailored to provide options to any of those most probability issues. Geared up with this scheme to in a short time remedy most probability issues, producing CNML predictions round x sooner than attainable earlier than. Prior work studied this downside from a Bayesian strategy, however we discovered that it typically scales poorly for the issues we thought-about.

Geared up with a instrument for effectively producing predictions from the CNML distribution, we will now return to the aim of fixing outcome-driven RL with uncertainty conscious classifiers, leading to an algorithm we name MURAL.

To extra successfully remedy end result pushed RL issues, we incorporate meta-NML into the usual classifier based mostly process as follows: After every epoch of RL, we pattern a batch of factors from the replay buffer and use them to assemble meta-tasks. We then run iteration of meta-training on our mannequin.We assign rewards utilizing NML, the place the NML outputs are approximated utilizing just one gradient step for every enter level.

The ensuing algorithm, which we name MURAL, replaces the classifier portion of normal classifier-based RL algorithms with a meta-NML mannequin as an alternative. Though meta-NML can solely consider enter factors one after the other as an alternative of in batches, it’s considerably sooner than naive CNML, and MURAL continues to be comparable in runtime to straightforward classifier-based RL, as proven in Desk 1 under.

Desk 1. Runtimes for a single epoch of RL on the 2D maze activity.

We consider MURAL on quite a lot of navigation and robotic manipulation duties, which current a number of challenges together with native optima and tough exploration. MURAL solves all of those duties efficiently, outperforming prior classifier-based strategies in addition to commonplace RL with exploration bonuses.

Visualization of behaviors realized by MURAL. MURAL is ready to carry out quite a lot of behaviors in navigation and manipulation duties, inferring rewards from end result examples.

Quantitative comparability of MURAL to baselines. MURAL is ready to outperform baselines which carry out task-agnostic exploration, commonplace most probability classifiers.

This means that utilizing meta-NML based mostly classifiers for end result pushed RL supplies us an efficient manner to supply rewards for RL issues, offering advantages each by way of exploration and directed reward shaping.

Takeaways

In conclusion, we confirmed how end result pushed RL can outline a category of extra tractable RL issues. Customary strategies utilizing classifiers can typically fall brief in these settings as they’re unable to supply any advantages of exploration or steerage in the direction of the aim. Leveraging a scheme for coaching uncertainty conscious classifiers by way of conditional normalized most probability permits us to extra successfully remedy this downside, offering advantages by way of exploration and reward shaping in the direction of profitable outcomes. The overall rules outlined on this work recommend that contemplating tractable approximations to the overall RL downside might permit us to simplify the problem of reward specification and exploration in RL whereas nonetheless encompassing a wealthy class of management issues.

This submit is predicated on the paper “MURAL: Meta-Studying Uncertainty-Conscious Rewards for Consequence-Pushed Reinforcement Studying”, which was offered at ICML 2021. You may see outcomes on our web site, and we offer code to breed our experiments.

tags: c-Analysis-Innovation

BAIR Weblog

is the official weblog of the Berkeley Synthetic Intelligence Analysis (BAIR) Lab.

BAIR Weblog

is the official weblog of the Berkeley Synthetic Intelligence Analysis (BAIR) Lab.

[ad_2]